Technocalvinism

Reject claims of total technological determinism. Differential tech development is achievable.

This is a guest post by Luke Drago, Co-Founder of Workshop Labs. If you want to write for the Cosmos Institute, send us your idea here.

“Those who lack the courage will always find a philosophy to justify it.”

- Albert Camus

Mechanize – a company dedicated to “the full automation of the economy” – recently released a blog post titled “The future of AI is already written.” In it, they say that you can’t beat them, so you might as well join them:

“Innovation often appears as a series of branching choices: what to invent, how to invent it, and when. In our case, we are confronted with a choice: should we create agents that fully automate entire jobs, or create AI tools that merely assist humans with their work?

Upon closer examination, however, it becomes clear that this is a false choice. Autonomous agents that fully substitute for human labor will inevitably be created because they will provide immense utility that mere AI tools cannot. The only real choice is whether to hasten this technological revolution ourselves, or to wait for others to initiate it in our absence. [...]

To a first approximation, the future course of civilization has already been fixed, predetermined by hard physical constraints combined with unavoidable economic incentives. Whether we like it or not, humanity will develop roughly the same technologies, in roughly the same order, in roughly the same way, regardless of what choices we make now.”

The core argument is one I have heard too many times to count, oftentimes made by well-meaning people. For that reason, I think it’s worth discussing.

I call this line of thinking technocalvinism: the idea that technological development is preordained beyond human control, leaving you blameless for your actions. It’s named after John Calvin’s concept of double predestination: the idea that God preordains who is saved and who is damned to hell before their first breath.

Technocalvinism makes a much stronger claim than technological determinism. Rather than identifying technology as a central driver of progress, it upholds it as the only relevant factor. It further argues that humans have no control over their own technological development. It uses this inevitability to argue that you are not at fault for choosing to accelerate technologies with negative effects.

This belief is more akin to faith than law. A more sober read of history concludes that, while technological forces are powerful, other factors remain relevant. We have steered technology before, and we may be able to do it again.

To counter technocalvinism, we need to build the AI equivalent of renewable energy – transformative AI that keeps the future human. But in order to build it, we’re going to need a culture of innovation that tries to build for humans. That’ll require believing it can be done.

So to inspire some belief, I am going to rebut Mechanize’s post section by section. They make four claims:

“The tech tree is discovered, not forged”

“We do not control our technological trajectory”

“Full automation is inevitable”

“Full automation is desirable”

I make four counterclaims:

Technology is invented

We can steer technology

There may be competitive alternatives to full automation

Full automation has existential stakes

Let’s examine the arguments.

1. Technology is invented

Most technologies are dependent on the previous technologies available to them. You can’t invent the internet before the industrial revolution, and you definitely can’t develop cyberdefense before the internet.

But at similar levels of technological development, we have agency in what we prioritize. In principle, there is no reason why cyberoffensive technologies have to come before cyberdefensive ones. You do not require bigger arrows to build bigger shields.

Mechanize thinks this is wrong – the order is entirely predetermined. To show this, they note that technologies are often independently discovered.

“Technologies emerge almost spontaneously when the necessary conditions are in place. When the prerequisites fall into place, invention follows quickly. Consider LLMs: based on NVIDIA’s revenue data, we can infer that the compute required to train a GPT-4 level model only became available around 2020. GPT-4 itself was trained just two years later in 2022.”

I agree that when technologies emerge, they enable new ones. Many smart people look at technology A and realize that they could invent technology B. It’s not surprising that this happens at the same time in many places.

But Mechanize argues that there is usually only one way of solving a problem, which would imply discovery that we have zero control over.

“Isolated civilizations converge on the same basic technologies. When Hernán Cortés arrived in the New World in 1519, he encountered a civilization that had been evolving independently from his own for over 10,000 years. The Aztec were unlike the Spanish in many ways. They didn’t speak the same language, practice the same cultural traditions, or worship the same gods.

Yet for all their differences, there were also many striking similarities. Both had independently developed intensive agriculture with irrigation and terracing. Both designed their cities using rectangular street grids centered on public plazas. Both utilized the concept of zero, wove cotton into dyed clothing, used currency for trade, and built monumental stone architecture. Both were hierarchical societies that featured a monarch at the top, a hereditary nobility, bureaucracies to administer taxation, and standing professional armies.

This pattern is normal, not unusual. Civilizations separated by vast distances and time independently developed metallurgy, the wheel, writing, and bureaucratic states. Specifics varied with local circumstances: writing emerged on clay tablets in Mesopotamia, papyrus in Egypt, bamboo strips in China, and bark paper in Mesoamerica. Each civilization worked within their local constraints, utilizing the materials that were available to them. Yet each came to possess similar technologies when faced with similar problems.

These observations suggest that there is often only one efficient method of solving problems that societies face. Rather than having a free hand in how to develop, societies are fundamentally constrained by what works and what doesn’t. Certain technological and social structures must emerge at given developmental stages, regardless of specific cultural choices.”

However, many societies never invent certain technologies despite having all the right ingredients. Instead, they choose other methods to solve the problem.

To demonstrate this, let’s examine their example of the Spanish and the Aztecs. They say convergent development suggests there is only one efficient way to solve a problem. For example, the wheel was developed everywhere.

But the Aztecs didn’t have widespread use of the wheel. Despite knowing about the wheel and using it in children’s toys, they didn’t use it for transport. From a great summary blog [1]:

“When the Spanish arrived from Europe in the sixteenth century they were astounded at the remarkable skills exhibited by the architects, builders and craftsmen of the ‘New World’. The calendar developed by the ancient Maya was more accurate than the calendar in use throughout Europe and the medical system in place among the residents of Mesoamerica was superior to that of the Spanish.

Yet, for all the advanced thinking, there was no utilitarian wheel; no carts, no wagons, no potter’s wheel. Still the concept of the wheel was known throughout Mesoamerica.

Oddly enough, the Maya built roads, or more correctly, causeways. These roads, called sacbeob meaning white roads were constructed of limestone and paved with natural lime cement called sascab. Often as wide as ten to twelve feet and raised between a foot or so to as much as seven or eight feet above the ground, the sacbeob connected various areas of settlement. The sacbeob at one Maya site (Coba) in the Yucatan of Mexico connects several major architectural groups, the longest running in an almost perfect straight line for over sixty miles! Archaeologists have found what may have been stone rollers used to compact the road bed during construction.

But no wheels.”

Some historians argue that the wheel wasn’t adopted because of a lack of suitable domesticated animals, but I think this is wrong. There are non-animal-based wheeled transport options, like wheelbarrows or human-pushed carts, which the Aztecs could have utilized. These require more labor than animal-drawn carriages, but the Aztecs already used slave labor to transport their goods.

Perhaps more importantly, the Incas domesticated llamas – but they loaded cargo onto the llamas’ backs and made them carry it. No wheel was utilized. Some have argued that llamas couldn’t pull carts. Your eyes provide a different answer:

That’s a lot of llama talk for a post that hasn’t mentioned Meta. But it’s not just llamas:

The Ancient Greeks developed a primitive analog computer in the second century BC for tracking astronomical phenomena. Similarly complex machines were not reinvented until at least the fourteenth century AD.

Roman concrete is the most resilient concrete man has ever produced, and we lost it for nearly two millennia. We have far superior technology today, but we only just learned how to recreate it.

The state and complex bureaucracy – another invention Mechanize cites – was never invented in large parts of Africa, Asia, and the Americas. These societies didn’t have the kind of competition that produced states, so they solved coordination problems through other structures.

So yes, civilizations converge on technologies all the time. But it is not a law in the same way that gravity is. Some technologies are never discovered, others discovered and unused, and some are unique to certain civilizations. The people within a civilization often invent different technology, even as they are shaped by similar forces.

You could say the wheel was more efficient, and the failure to invent it held civilizations back. I would agree. But this means that either a) civilizations have choices over what they can technologically prioritize, or b) the best technology for any particular problem is not always invented.

You could say that we aren’t considering a wide enough window of time. Today, Mexico City is not a car-free zone. Eventually the state became widespread. We finally got Roman concrete. Maybe real technocalvinism has never been tried?

This is unfalsifiable. It means “in the future all technology will diffuse.” But diffusion is not the same as invention. If the Romans never invented Roman concrete in the first place, no one would know that modern concrete is lacking.

Mechanize makes a similar error with their comparison to biology:

“This principle parallels evolutionary biology, where different lineages frequently converge on the same methods to solve similar problems. The vertebrate eye and the cephalopod eye evolved completely independently, yet both converged on a remarkably similar camera-type design. Both have a cornea, a spherical lens for focusing light, an iris for controlling the amount of light that enters, a retina for resolving sharp details, eye muscles for tracking movement, and an optic nerve for transmitting visual information to their brains.”

It’s true that many species converge on the same thing. But it is also true that many species do not. Humans have two eyes, spiders have eight. Birds can fly, and I still can’t. Evolution demonstrates there are many, many ways to remain competitive. Proof of fitness comes through reproduction, and millions of species have met the bar using a variety of strategies.

Mechanize needed to prove extraordinarily strong determinism: that the same solutions to the same problems appear in the same order. Instead, while physics and incentives shape what’s possible, different people invent different things. Technology is forged by people making decisions to solve the problems they face, built upon the tech they already have.

2. We can steer technology

We can steer the near-term order in which we develop technologies, and it can have a dramatic impact. If you can see the downsides of a new technology before they fully materialize, you can pre-emptively build the solution. The field calls this differential technological development. [2]

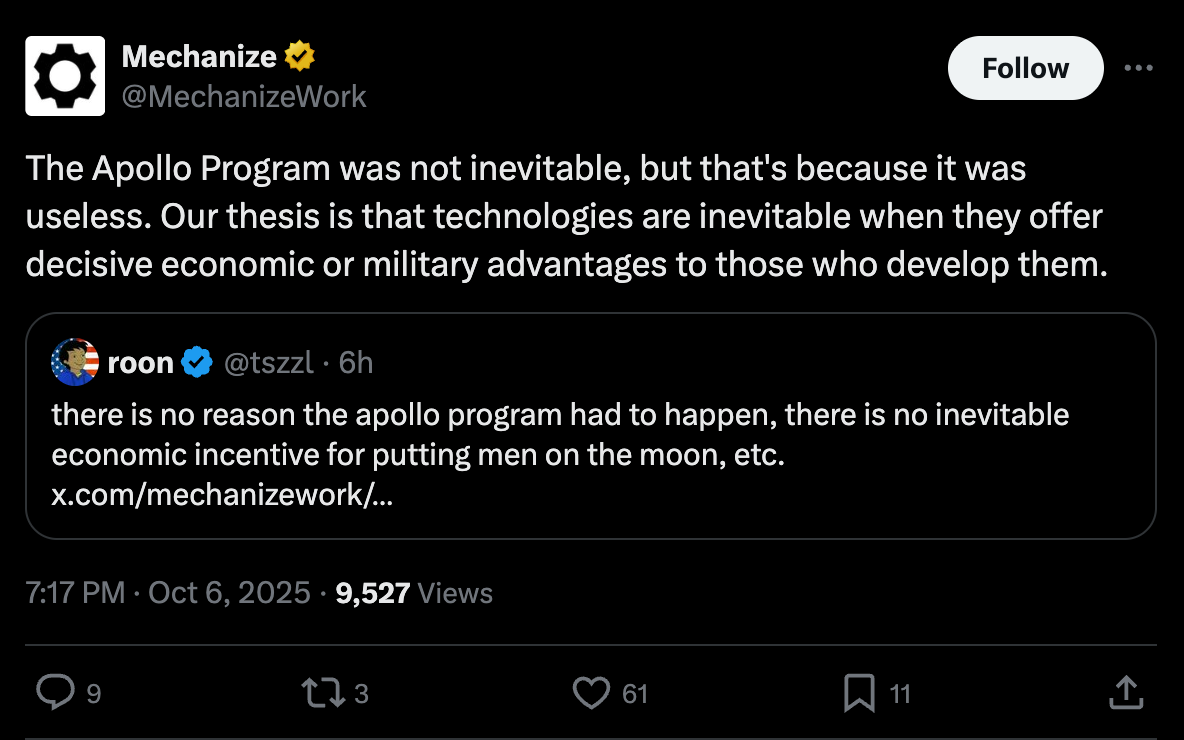

Mechanize disagrees, claiming that we can’t steer technology that offers decisive advantages.

“Nuclear energy is highly regulated, but this does not imply humanity has much control over technology in general. It is easy to constrain a technology when there are readily available substitutes that work about as well for nearly as cheap. Without nuclear energy, humans can still use coal, oil, natural gas or hydroelectric energy to heat their homes and power their devices.

The true test of whether humanity can control technology lies in its experience with technologies that provide unique, irreplaceable capabilities. Rather than looking at nuclear energy, we should look at nuclear weapons. Nuclear weapons are orders of magnitude more powerful than conventional alternatives, which helps explain why many countries developed and continued to stockpile them despite international efforts to limit nuclear proliferation.

[...]

The broader picture suggests that when a technology offers quick, overwhelming economic or military advantages to those who adopt it, efforts to prevent its development will fail. Delaying or regulating its use may be possible, but forgoing the technology entirely seems to be beyond our capabilities. Transformative technologies will be developed anyway.”

Mechanize has narrowed their claim. Previously, they argued that “technologies emerge almost spontaneously when the necessary conditions are in place.” Now, technologies are only preordained if they offer a “decisive advantage.”

Mechanize uses energy to exemplify this argument. They say that energy is a category with many options, so humans can make choices about which sources to prioritize and compare their tradeoffs. Other inventions have no substitutes but offer a decisive advantage, so we do not have such a choice.

Even within their narrowed thesis, this example works against them.

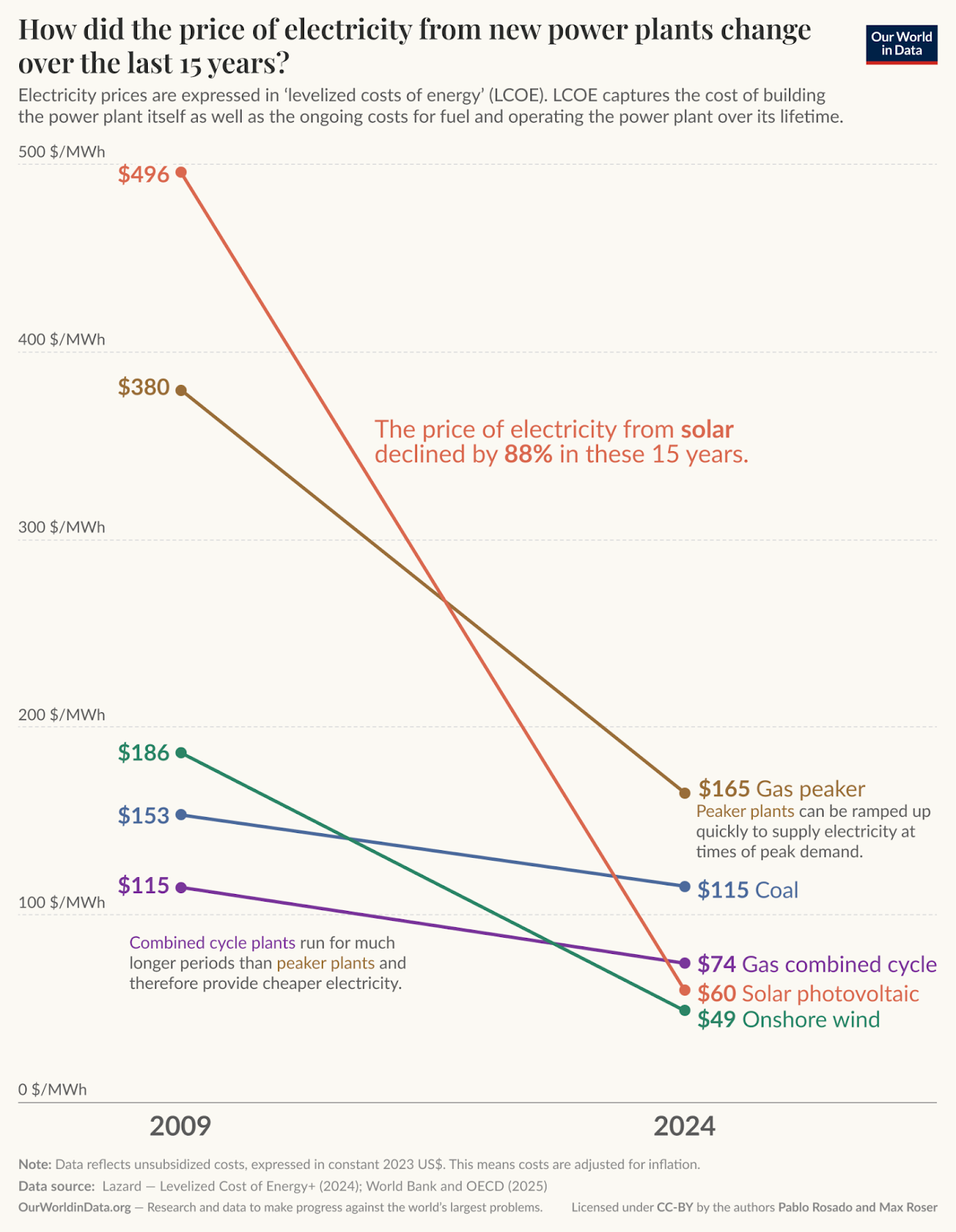

Energy is famously one of the most profound examples of differential technological development in human history. [3] For a long time there wasn’t a good substitute for fossil fuels. Renewable energy was not economically efficient, so king coal and its cousin oil both powered and polluted the world.

But some people noticed that burning all of this coal was making things uncomfortably warm. Plus, it looked like there were other promising alternatives that were not competitive today but could be made so. So capital was deployed, subsidies were granted, and talent was mobilized. We invested before they were competitive and kept them alive through the valley of economic unviability. Today, renewable energy is cost-competitive with fossil fuels, close to half of total electricity production and trending towards dominance.

Remember that while this was happening, there was an entire industry of “experts” bought and paid for by the fossil fuel industry that insisted this was impossible. Climate change was a hoax, renewable energy could never compete, and your only option to power the future was “drill baby drill.” It’s still popular in the US to deny that renewable energy sources are effective alternatives to fossil fuels. Sound familiar?

Renewable energy exemplifies how technocalvinism can be disrupted. The problems associated with technology A can incentivize people to try to make technology B – a version of A with fewer downsides. If a technology has tremendous upsides but unavoidable, catastrophic downsides, it can create a booming market for someone to invent a better substitute. The larger the downside, the bigger the market to solve it.

Other examples of differential technological development rely on coordination rather than pure market forces. The most famous is the space race.

Going to the moon was an extraordinarily difficult goal. The technology just didn’t exist. A whole-of-government effort was summoned to make it happen. It required us to prioritize building technology outside of normal economic incentives. And we did it! We sprinted generations ahead of our capabilities and differentially developed technologies that may not have otherwise existed for decades.

But Mechanize tried to find a way around this example. Apparently, the Apollo Program was useless, so it doesn’t count.

This seems wrong. The US and USSR were in an ideological conflict which both viewed as existential. The strategic benefits at the time were large. The program’s downstream effects included a stable early market for the integrated circuit, an early market for renewable energy, and the early technology that led to – among other things – the second most valuable private company in the world.

But I can pick an example of differential technological development we all agree is useful. Here’s one: the modern AI industry is a trillion-dollar example of differential technological development.

Google’s decision to publish the transformer paper was not profit-optimal. OpenAI (the most valuable private company in the world) started as a nonprofit organization funded by a billion-dollar donation from wealthy donors who were not expecting a return. Today’s AI investment boom was downstream of the decision to release ChatGPT, a product that most people within OpenAI did not believe would be successful.

Each of these actions was a choice that differentially accelerated a fledgling technology well before it was economically viable. The result is that we are decades closer to general intelligence; expert AGI timelines have shrunk by 10x since 2020. This was not predestined.

Mechanize’s position is unfalsifiable. It holds that every technology that arrives early must not offer a decisive advantage, and anything possible but not developed must not be decisively important. The evaluation of the technology’s usefulness merely flows from the order it was developed.

To be clear, differential technological development is hard. [4] It requires focused effort, lots of capital, a clear view of the problems you are trying to avoid, and a culture that believes it is achievable. But it is fundamentally possible – we’ve done it many times before, and can do it again.

Ironically, Mechanize themselves are engaging in differential technological development. They are choosing to speed up the path towards total human automation before we can invent competitive human-augmenting technologies. Technocalvinists ask that you believe as they say, not as they do.

3. There may be competitive alternatives to full automation

Our civilization is in a sprint to build extraordinarily capable AI. Not because technology is fixed and technocalvinism is true, but because we are spending lots of money to make this happen and we are doing pretty well so far. Progress has been undeniably rapid.

Does that mean the exact shape, form, and time we create advanced AI is predetermined?

Mechanize says yes:

“AI presents a powerful case for a technology that can’t be easily constrained. Since any task can, in principle, be performed by a machine, AI promises to increase productivity in virtually every conceivable domain of economic activity. The rapid economic growth that will likely result from the deployment of advanced AI means that any nation that chooses not to adopt AI will quickly fall far behind the rest of the world.

Yet there are many who believe, or at least hope, that we can seize the benefits of AI without making human labor obsolete. They imagine that we can just build AIs that augment or collaborate with human workers, ensuring that there is always a place for human labor.

These hopes are, unfortunately, mistaken. In the short-run, AIs will augment human labor due to their limited capabilities. But in the long-run, AIs that fully substitute for human labor will likely be far more competitive, making their creation inevitable.

[...]

This logic applies to virtually every job a human can perform, which suggests that we will eventually see the automation of virtually all human jobs. Companies that recognize this fact will be better positioned to play a role in the coming technological revolution; those that don’t will either struggle to succeed or will be forced to adapt.”

I disagree. While difficult, we can steer this technology via two mechanisms: available talent and countermarkets. Through these levers, we may be able to achieve AI that is just as transformative, but with fewer downsides.

Available talent

We are not yet in the post-AGI future, and today capital does not reliably convert to output. It is entirely bottlenecked by available talent.

AI is an extraordinarily talent-constrained field, more so than any field in recent memory. There are few people who meaningfully push the frontier. That’s why Meta made a billion-dollar offer for one researcher. That researcher said no, and Meta had to counter with (reportedly) five hundred million dollars more to get him to a yes. You do not do that in fields overflowing with talent.

The fact that it is hard to convert capital to output is why Mechanize is so dead set on telling you their technological path is predestined. Mechanize is recruiting, and they need you to believe this is inevitable so they can get you to help them achieve their mission.

Ironically, this pattern is consistent with most deniers of human agency.

Marx argued that the proletarian revolution was a law of history. Despite this, he wrote a very famous pamphlet trying to instigate a revolution. Ultimately, the global revolution did not occur, despite Marx’s best effort to convince you it was guaranteed. Calvin claimed man was predestined to be saved or damned, yet he preached incessantly to convert people. He behaved like he was wrong.

If you truly believe your cause is inevitable, you won’t need to do anything to manifest it.

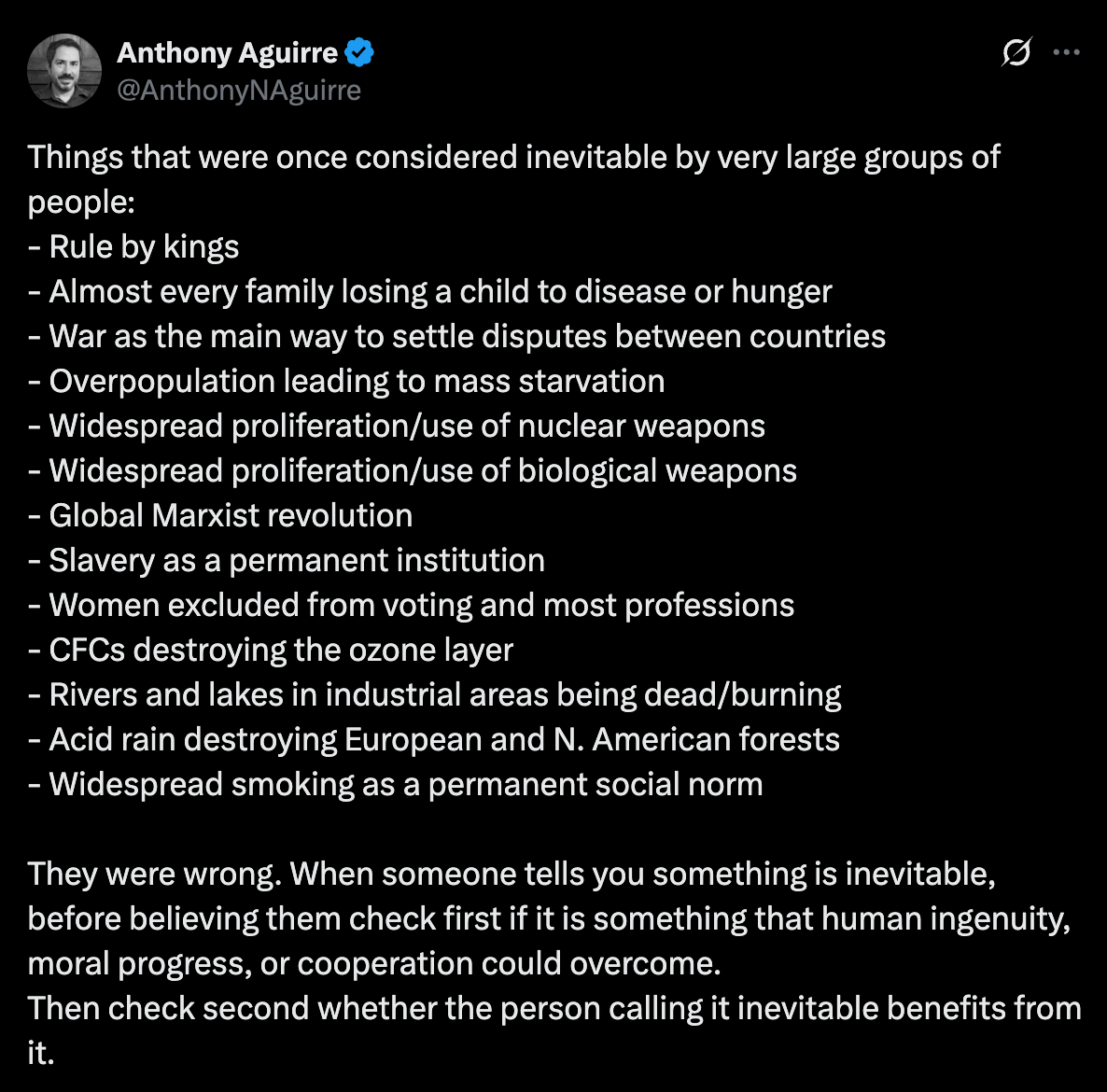

Historically, talented people have been pretty good at changing the inevitable – not by totally abolishing a path, but by shifting it so that a new one could open. In fact, there’s a long list of work here:

So what should frontier talent try to build instead? Let’s examine the countermarket.

Countermarkets

Technologies with adverse effects create countermarkets that increase the pressure for differential tech development. Without McDonald’s, Ozempic wouldn’t sell so well.

For the vast majority of people, there will be a desperate demand to develop the technology that would offer them a decisive advantage over the Job Replacer 3000. The market for tools that help people stay in the economy as Mechanize tries to automate them will be extraordinarily large, because people will pay to stay in the game. The question is if that market can successfully spawn tools to compete with the ever-growing automation market.

Complicating this is the importance of differentially accelerating this countermarket. In normal markets, a new technology introduces a problem which can then be solved. For example, there’s a large gap between industrialization and catastrophic climate change, which gives us time to switch to renewable energy.

But in this case, we may have some path dependence. The incentives to build augmentative AI are stronger while regular people are powerful consumers. If a critical threshold of people get automated, we could see augmentation go the way of nuclear energy – once feasible, now too expensive compared to the alternatives. That is why now is the moment to intervene.

So what do we do?

By exploiting performance weaknesses, we can keep humans competitive for a long time via augmentative AI. We’ll define augmentative AI as systems that help humans complete a task, rather than replacing a human entirely.

Augmentation is genuinely more economically useful today – Mechanize and I agree on this. The most aggressive predictions of AI progress say that 2025 is, at best, the year of “stumbling agents.” Karpathy has called existing agents “slop.” Meanwhile, augmentative tools are already producing value.

AI on the augmentative paradigm could benefit from a learning curve – the tendency for technology to get cheaper as it is scaled to more customers and more rapidly produced. As we scale it, we learn more about how to develop it efficiently. Solar energy benefitted from this effect dramatically, ultimately taking a cost-uncompetitive technology and making it cost-competitive. [5]
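To make the learning-curve idea concrete, here is a minimal sketch of Wright's law, one common way to model it: unit cost falls by a fixed fraction every time cumulative production doubles. The function names, the 20% learning rate, and the starting cost of 100 are illustrative assumptions, not figures from this post and not measured values for solar or for AI.

```python
import math


def unit_cost(first_unit_cost: float, cumulative_units: float, learning_rate: float) -> float:
    """Wright's law: cost drops by `learning_rate` for each doubling of cumulative output."""
    if cumulative_units < 1:
        raise ValueError("cumulative_units must be at least 1")
    if not 0.0 < learning_rate < 1.0:
        raise ValueError("learning_rate must be between 0 and 1")
    # Exponent is negative: log2(0.8) is about -0.32 for a 20% learning rate.
    exponent = math.log2(1.0 - learning_rate)
    return first_unit_cost * cumulative_units ** exponent


def doublings_to_reach(first_unit_cost: float, target_cost: float, learning_rate: float) -> float:
    """How many doublings of cumulative output are needed to hit `target_cost`."""
    return math.log(target_cost / first_unit_cost) / math.log(1.0 - learning_rate)


if __name__ == "__main__":
    # Hypothetical numbers: a technology that starts at 100 per unit
    # and gets 20% cheaper with every doubling of cumulative production.
    for units in (1, 2, 4, 8, 16):
        print(units, round(unit_cost(100.0, units, 0.20), 2))
    # Doublings needed before it matches a hypothetical incumbent priced at 30.
    print(round(doublings_to_reach(100.0, 30.0, 0.20), 1))
```

The point of the sketch is the shape, not the numbers: under this model, whichever paradigm accumulates volume faster moves down its cost curve faster, which is why early investment in a not-yet-competitive technology can change which one ends up cheaper.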

The race between automation and augmentation could result in a steeper learning curve for augmentative AI. Enterprises like fixed cycles and predictable development, further limiting the speed at which AI labs can get feedback on their automation efforts. This is partially why consumer product companies innovate faster than enterprise ones; Facebook has to evolve faster than Asana does to survive. Finally, remember that there are more individual customers for augmentation than enterprises seeking automation, even if each automation success earns a larger return.

All this means that as enterprises automate their employees, there’s a larger number of potential customers for augmentation, allowing augmenters to iterate faster. [6] More volume plus faster shipping time is one real structural advantage the augmenters will have in the race.

This trend could continue; augmentative systems could have similar benefits to automative systems. Scaling this paradigm could unlock extraordinary scientific and economic benefits without engineering total human replacement. Depending on how quickly we can accelerate some advanced technologies, this could literally be forever. [7]

But even if augmentation is not literally cost-competitive on every task forever, there are technologies we could differentially accelerate that link people to economic benefits for the long haul.

We outline an extensive plan for how to do this in The Intelligence Curse, and I’d strongly recommend reading the whole thing. To keep it brief, I’d prioritize a few categories:

First, we could align copies of powerful models to individual people. Individual AIs aligned to a person’s values and trained on their data could take advantage of their tacit knowledge and local information to compete on their behalf, scale their unique taste and judgment, and advise them on key decisions. Because the model is aligned to them alone, they could trust that it will genuinely act in their best interest. And since these models have their user’s tacit knowledge and local information, they could be better at some tasks than an off-the-shelf model. This could create an economy of agents with billions of models advocating for their users, completing tasks, and trading information – each of which is directly tied to one person for their benefit. Because the user alone controls the model, they stand to benefit from its economic activity in the long haul. As Seb Krier notes, this could be the key to solving bottom-up coordination at scale. This is the technology we’re working on at Workshop Labs.

Second, we could dramatically decentralize AI capabilities. We’re on a trajectory for a couple of players to control key infrastructure like compute clusters and proprietary models. If the economy runs through a few players, it’s likely they’ll accumulate unprecedented capital. Instead, the more capital accumulates at the application layer, the more likely a diverse set of humans own that capital and can deploy it. If smaller groups and individuals could deploy cheap models on infrastructure they control, they could earn more capital over the long haul without ceding it to centralized players. Technologies to watch here include distributed training, local compute, open source AI, and new financial instruments to get newly formed small businesses access to capital.

Third, we could scale powerful narrow AI. Instead of developing systems that are autonomous, general, and superhuman, we could develop superhuman tools in narrow domains that outcompete more general systems. Think AlphaFold for everything – extraordinary intelligence applied to problems we actually face in narrow domains.

Ultimately, I believe that a combination of powerful narrow AI, decentralized models and compute, and an ecosystem of user-aligned agents could be a performance-competitive system compared to a singleton. We could cure cancer, achieve explosive growth, and all the while keep the future human.

There may also be many more ways we could shift the current paradigm of AI development that yield transformational benefits without civilizational costs. If we get serious about finding and building them, I expect we will be shocked at the low-hanging fruit we’ve been neglecting to pick in this direction.

It’s hard to know for sure, as both systems are extraordinarily speculative. That’s the thing with differential development – you can’t know the exact details until you try to build it.

But you can know one thing: this is not a game of structural forces vs differential development. You are witnessing two attempts at differential tech development – different visions of competing futures. Both the automators and the augmenters are trying to summon technology that should be as far away from us today as landing on the moon was in 1962.

I cannot guarantee that this approach will work, but neither can Mechanize.

4. Full automation has existential stakes

To date, nearly everything I’ve written has been about labor automation. You’ve heard enough from me about this.

So instead, I’m going to let the Mechanize team do the talking. To start, here’s Matthew Barnett – one of the co-founders – in an article earlier this year titled “AGI could drive wages below subsistence level”:

“Historically, many have feared that automation would lead to mass unemployment and lower wages. Yet, despite massive improvements in automation in the last two centuries, average wages have risen, living standards have improved, and high unemployment has not become a persistent, long-term issue as many had expected. This historical pattern has led most economists to adopt the following optimistic view: automation typically creates at least as many opportunities as it destroys, and its overall impacts on wages are positive.

But artificial general intelligence (AGI)—defined here as a technology that can functionally substitute for human workers in all labor tasks—may defy these historical precedents. Unlike past technologies, which typically automated specific tasks within industries, AGI has the potential to replace human labor across the entire spectrum of work, including physical tasks, and any new tasks that could be created in the future. Because of this, AGI might disrupt labor markets in an unprecedented way.

In fact, there is a straightforward case for why developing AGI could drive human wages below subsistence level—the bare minimum needed to sustain human life. This argument states that if there are essential but scarce factors of production that cannot be quickly scaled through investment, then these constraints will push down the marginal productivity of labor. Over time, this could drive wages down to the point where they can only barely cover the cost of sustaining labor. If AGIs are fully capable of substituting for labor, this minimum sustaining cost would likely rest below the level required for human survival.

The specific dynamics of this argument depend on the time horizon. In the short term, it may turn out to be much easier to accumulate AGIs than traditional physical capital, making physical capital the scarce factor that limits productivity and pushes wages downward. Yet, there is also a reasonable chance that technological progress could counteract this effect by making labor more productive, allowing wages to remain stable or even rise. Over the long run, however, the pace of technological progress is likely to slow down, making it increasingly difficult for wages to remain high. At that point, the key constraints are likely to be fundamental resources like land and energy—essential inputs that cannot be expanded through investment. This makes it highly plausible that human wages will fall below subsistence level in the long run.

Informed by these arguments, I would guess that there is roughly a 1 in 3 chance that human wages will crash below subsistence level within 20 years, and a 2 in 3 chance that wages will fall below subsistence level within the next 100 years.”

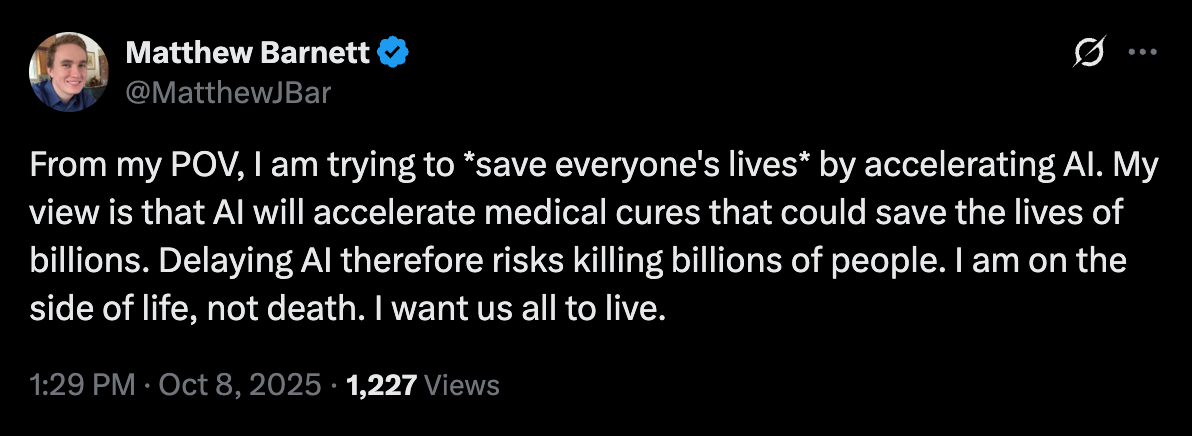

Barnett argued there is a 2 in 3 chance that mass automation drives wages below the level required to keep you alive in the next 100 years. Why would someone who thinks automation will end with most people not making enough money to eat start a company focused on accelerating automation?

I don’t want to be uncharitable to Barnett. In a recent tweet, he clarified his position and explained his motives in founding Mechanize:

But I think this is an example of technocalvinist thinking. If wages are below subsistence, where will people get the money to buy (expensive) life extension technology? Surely extending average human lifespans from 80 to infinity is unrealistic for the average person, especially if they won’t be able to afford their normal lifespan.

To solve this, a recent Mechanize blog post claims that we could survive via government intervention, investments, or charity:

“The clearest evidence for this is that income redistribution already happens on a massive scale. Progressive income taxation is a central pillar of government revenue in most high-income countries around the world. If the rich could effectively coordinate to eliminate income redistribution, they would have abolished this system long ago. Similarly, if the rich had it their way, social welfare programs for the poor and middle class would be small. Yet these programs typically represent the largest spending items in government budgets. The fact that redistribution occurs so extensively, despite the obvious incentive for the wealthy to prevent it, reveals how limited their political power actually is.

These arguments suggest that the wealth from full automation will be widely shared. It won’t be distributed equally, but broadly enough that most people will enjoy living standards far higher than what we consider prosperous today.”

But Mechanize leaves out an important caveat, which Barnett noted earlier this year:

“Nonetheless, the consequences of AGI on human welfare remain highly speculative, and indeed, we should not rule out the possibility that humans will eventually lose their wealth through expropriation or through violent revolution. If this occurs, the development of AGI may well be negative for human welfare. This is because, if my analysis is correct, humans in the future may not be able to earn enough money to survive comfortably through their wages alone.”

The problem with this is threefold:

It rests the fate of the species on a single, critical moment of moral altruism after immense power concentration.

It relies on stable states exactly like the ones we have today with strong incentives to care about regular people.

It defies what has occurred most times we’ve run this experiment.

Mechanize’s claim depends on major companies or governments choosing, out of benevolence, to become the world’s largest charitable organizations. They cite Qatar as an example of how this could go well.

But this ignores the many, many other petrostates where this has gone poorly. In most resource-curse-afflicted states, the rentiers tend to profit while the people tend to suffer.[8] The state has no reason to invest in its people because it doesn’t make a return on them, so it doesn’t. Exceptions exist, but they tend to be driven by credible challenges from citizens to the state’s power, which automated repression would take off the table.

In an arrangement where you provide no value, have no power, and cannot change your circumstances, you probably can’t demand access to transformative technology. Selfishness is a powerful force, and those who have may not find it in their hearts to help those who have not. As Rudolf and I say in The Intelligence Curse, “when powerful actors create and implement general intelligence, they will lose their incentives to invest in people.”

Barnett previously agreed. In a piece called “It’s not just the incentives”, Barnett argued that people are inherently selfish, so we should order our society to incentivize people to do good things. Otherwise, greed prevents good outcomes:

“Problems like homelessness are caused by zoning laws, yes, but they’re also caused by people being selfish. Why? Because lots of people could just voluntarily donate their wealth to help homeless people. Anyone with a second house could decide to give it away. Those with spare rooms could simply rent them out for free. There are no laws saying you must spend your money on yourself.

A simple economic model would predict that if we redistributed everyone’s extra housing, then this would reduce the incentive to create new housing. But look closer at the assumptions in that economic model. We say that the incentives to build new housing are reduced because few people will pay to build a house if they don’t get to live in it or sell it to someone else. That’s another way of assuming that people value their own consumption more than that of others—another way of saying that people are selfish. [...]

My main issue with attempts to redesign society in order to make people less selfish or more cooperative is that you can’t actually change people’s innate preferences by very much. The most we can reasonably hope for is to create a system in which people’s selfish values are channeled to produce social good. That’s not to say it wouldn’t be nice if we could change people’s innate preferences. But we can’t (yet).”

Could redistribution work? It’s possible. Perhaps the least selfish man in the world will be the one who gathers all the post-AGI wealth. One way you can reconcile the role of human agency with structural forces of history is that the great men of history are those who make a choice that flies in the face of their incentives. Washington left office; Petrov stopped nuclear war.

But I think it would be better to build a future where we still matter, instead of leaving our fates in the hands of others.

Foster a culture of pro-human innovation

This year’s Nobel Prize in Economics was awarded in part to Joel Mokyr, an economic historian with a focus on the history of innovation. From Anton Howes’ summary of Mokyr’s work:

“Whereas most of the public, and even many historians, think of the causes of modern economic growth – the beginnings of the Industrial Revolution – as being rooted in material factors, like conquest, colonialism, or coal, Mokyr tirelessly argued that it was rooted in ideas, in the intellectual entrepreneurship of figures like Francis Bacon and Isaac Newton, and in the uniquely precocious accumulation in eighteenth-century Britain of useful, often mechanically actionable knowledge. Britain, he argued, through its scientific and literary societies, and its penchant for publications and sharing ideas, was the site of a world-changing Industrial Enlightenment – the place where progress was thought possible, and then became real.”

Most of us already know the technologies that define the future don’t just appear from the void. It’s why states set innovation-friendly policies, companies spend billions on R&D, and individual inventors spend so much time trying to master their craft.

Historically, we’ve embraced differential development to pioneer new technologies. Bell Labs, ARPA and DARPA, Google Moonshot – these are all places where engineers were empowered to bring forth technologies from the far future to the present.

So why has Western innovative culture oriented itself to differentially develop humanity’s planned obsolescence?

Fundamentally, many of us don’t believe in a future for ourselves. Many of our institutions – the incubators, capital deployers, and regulatory bodies – don’t believe in us either.

Marko Jukic argues that the quest for total automation is fundamentally rooted in a lack of self-esteem. I disagree with most of the article, but this diagnosis is one worth reading:

“[I]nsofar as artificial intelligence is not just another form of automation but the introduction of autonomous, generally-intelligent minds capable of matching or even outdoing human genius, agency, and ingenuity, the problem is not even that this remains totally speculative from a technological standpoint, but that, philosophically, it amounts to saying that it is so difficult to get human beings to reproduce under modern techno-industrial conditions that it would be easier to just get rid of them entirely and replace them with artificial human beings. If you care about humanity, then this is not a persuasive argument.

The social niche this argument occupies is not that of a self-confident vision justified earnestly on its clear and universally-recognized merits, but that of a furtive excuse and accounting trick whose assumptions and implications would be widely rejected if they were thoroughly appreciated. “Degrowth” environmentalism effectively proposes that the solution to industrial civilization’s problems is to simply abolish industry while keeping humanity; this is increasingly and accurately recognized as the actual proposition behind the school of thought, and rightly rejected due to the inevitable, self-imposed impoverishment that would follow. Along the same lines, some of the most committed believers in the potential of artificial intelligence are effectively proposing to solve industrial civilization by abolishing humanity while keeping industry.”

It is not shocking that technocalvinism has such a strong hold on San Francisco. It is a city with a prosperity gap so great it follows geographical elevation. The hills hoist to the heavens the heights of human wealth, while the pits contain profound human peril. The cars drive themselves while the people on the street stumble. In such an environment, many look around and conclude that we are a problem to be solved.

Technocalvinism insists that building a human future is impossible. It appeals to its adherents because it can justify nearly anything and leaves no one morally culpable for harm. The bigger the stakes and the larger the impact, the more seductive it becomes to convince yourself.

The problem is that technocalvinism is epistemically poisonous. The intellectual culture that is required to drive innovation towards the countermarket can’t form while we use poorly formed arguments to dismiss the possibility of a different paradigm.

It is going to be hard to shift the culture. The technocalvinists are well capitalized, talent-rich, and deeply motivated by a total addressable market composed of the livelihoods of you and everyone you love. Technocalvinism is their most effective recruiting tactic, so they’ll fight hard to keep it entrenched.

But cultures can and do change. Just as the culture of the Enlightenment enabled the Industrial Revolution, we can foment a culture that prioritizes humans and enables a technohumanist revolution. A culture that believes in a future for humans is one that will build it.

To be clear, it’s not just a cultural problem. Tech and culture reinforce each other in a cycle. Our existing technology shapes our culture, and our culture shapes our innovative institutions, the environment in which our innovators have their ideas, and the ideas of the capital deployers that bring ideas to the market.

All of this means we need a blossoming of efforts. Our best authors should write compelling visions of a human-AI future that don’t just end with us all being watched over by omnibenevolent machines. Capitalists, governments, and donors should seed venture funds and research institutions that champion human agency and deploy capital accordingly. Curators should run physical coworking spaces housing innovators sharing a pro-human cause. Entrepreneurs should think seriously about the things they are trying to build. And as these projects grow, much of the frontier talent should consider them as viable alternatives.

It’s also important to flag that regardless of incentives, you still have moral responsibility for your choices. Saying “the incentives made me do it” is akin to “I was just following orders.” Morality is often best demonstrated by making the right choice despite the incentives.

We have the opportunity to build the equivalent of renewable energy – AI with transformative benefits, without the destructive downsides. We have a moral mandate to give it a shot. And if we succeed, one of the largest markets in history is up for grabs.

The technocalvinists claim the future of AI is already written. Prove them wrong.

Thank you to Vitalik Buterin, Rudolf Laine, Alex Komoroske, Anton Leicht, Taren Stinebrickner-Kauffman, David Duvenaud, Harry Law, Jason Hausenloy, Philip Tomei, Liam Patell, Justin Miller, Oscar Moxon, Luca Gandrud, Clay Wren, Nikko Osaka, Duncan McClements, Tim Kostolansky, Samuel Ratnam, and Gideon Futerman for reviewing drafts of this post.

Cosmos Institute is the Academy for Philosopher-Builders, technologists building AI for human flourishing. We run fellowships, fund fast prototypes, and host seminars with institutions like Oxford, Aspen Institute, and Liberty Fund.

See also Diehl & Mandeville 1987

See Nick Bostrom’s Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards, Vitalik Buterin’s My Techno-Optimism, Matt Clifford’s Introducing Def/Acc, Michael Nielsen’s Notes on Differential Technological Development, and our own Breaking the Intelligence Curse.

See Dafoe 2015.

Thanks to Vitalik for flagging the learning curve argument to me.

I’m not confusing the number of customers with purchasing power. My claim is that the sheer number of customers with different use cases enables faster feedback loops, not that they will in perpetuity have a higher purchasing power. If the purchasing power of general consumers collapses, that market will become much less attractive. That’s all the more reason to kick off augmentative efforts now, rather than after mass automation has taken hold.

I am not a transhumanist for moral reasons, but I know a few. They would argue that we could compete forever via cyborgism, brain uploading, or other tech-body enhancement. I don’t elaborate on this point because it’s not my argument to make, but I would be remiss not to mention it.

See Karl 1997, Ross 2001, Eibl & Hertog 2024, Biewendt 2020, and many other papers.
