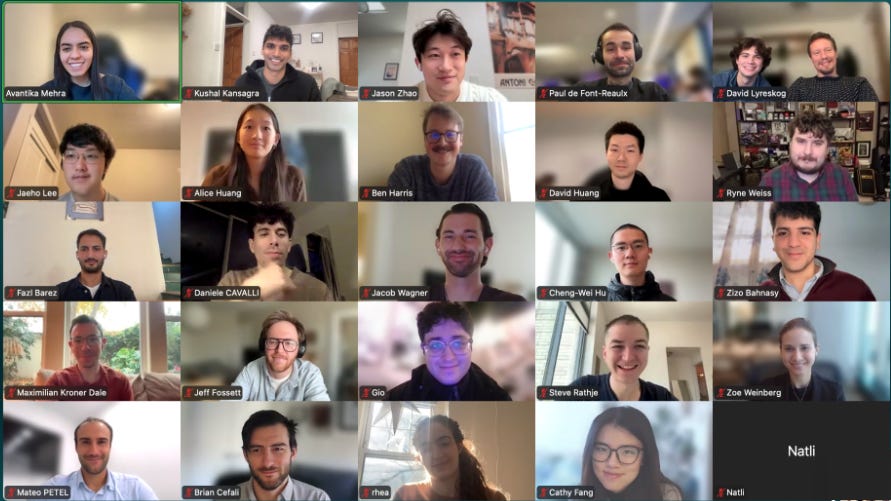

AI x Truth-Seeking Grant Winners: Our Second Cohort

Meet 24 more builders advancing open inquiry and human flourishing in AI

If we want a future with open inquiry and independent thought, we have to build for it.

Today, Cosmos Institute and the Foundation for Individual Rights and Expression (FIRE) are excited to announce our second cohort of AI x Truth-Seeking fast grants, along with a new set of General Track grantees working on adjacent risks and opportunities (from research into human autonomy to RL approaches to improve AI system alignment).

For this batch, we’re excited to support an immensely talented group, including former NotebookLM and DeepMind researchers, current Computer Science professors, and a student “study influencer.”

All winners receive $1K–$10K in grants and access to other top thinkers in philosophy and AI, and have 90 days to build a prototype.

Our fast grants aim to lower the barriers to bold experimentation and to accelerate idea and talent development. Since launching, we’ve backed 100+ projects through the program.

From hundreds of applications for this round, the following builders really stood out for their technical and moral vision. We’re excited for what they will build!

Alexandria by Zizo Bahnasy

A claim-testing engine that supports critical reasoning and long-term research

Zizo is a Harvard Economics & CS graduate working on mechanisms for shared prosperity with AI developments.

Anti Echo-chamber AI by Steve Rathje

AI system that is both non-sycophantic and enjoyable

Steve is an incoming Assistant Professor of Human-Computer Interaction at Carnegie Mellon where he will lead the “Psychology of Technology” lab.

askWisr by Erin McGurk

Gamified micro-lessons to teach effective AI use to students

Erin is a Cambridge Land Economy student who has worked with top AI companies on the use of tech in education.

Bridge by Ben Harris

A search engine for evidence and counter-claims

Ben is a CS master’s graduate with a background in finance, security, and system design.

Consider by David Lyreskog and team

AI platform for navigating polarized topics

David is a senior researcher in Neuroethics at Oxford University specialising in philosophy, ethics, and emerging tech.

DeliberationBench by Paul de Font-Reaulx, Luke Hewitt, and Maximilian Kroner Dale

Assessing AI persuasion by comparing it to diverse human discussion

Paul, Luke, and Maximilian bring backgrounds in cognitive and behavioral science to problems around how AI can improve human reasoning.

Hydrangea by Zaria Jalan

Social media labeller that highlights when claims differ from their sources

Zaria is a researcher at MIT Media Lab, with a background as a SWE at Google, Jigsaw and Pinterest.

Mirai by Cathy Fang

AI-powered self-voice for challenging assumptions

Cathy is a researcher at MIT Media Lab and first author of the first RCT on the psychosocial impact of chatbots on users, conducted in collaboration with OpenAI.

Norma by Jeff Fossett and Jesse Bryant

AI for surfacing the moral values behind political claims

Jeff is a Research Lead at Plurality Institute exploring digital technologies and public life. Jesse is a doctoral candidate in sociology at Yale.

Opinion Faction Remedies by Alice Huang

Algorithms that help people think outside of ideological bundles

Alice is an assistant professor in CS & philosophy who studies the social dynamics of science and collective reasoning.

Proposit by Brian Cefali

AI app to encourage self-questioning when developing arguments

Brian is a software engineering manager with a security background and passion for science and the humanities.

Socratic Trident by Mateo Petel

AI that is both auditable and adversarial

Mateo is an AI research scientist at Stanford and former DeepMind researcher who works on verification for high-stakes AI.

Thought Refractor by Cheng-Wei Hu

AI that turns arguments into interactive simulations and diagrams

Cheng-Wei is an ex-NotebookLM AI engineer and writer who is now building learning tools.

Whitebox Tools by Giovanni Occhipinti

Solutions to ensure AIs accurately report their reasoning

Giovanni is a visiting researcher at Oxford University investigating the failure modes of LLM reasoning.

AIxDemocracy by Fazl Barez

Research on preventing AI-enabled power concentration

Fazl is a Senior Research Fellow at Oxford University working on AI safety, interpretability, and technical governance to build AI systems aligned with human values.

Multi-Armed Bandit Evaluation Lattice by Jaeho Lee and Alon Ragoler

System that pits LLMs against each other to test for manipulative capabilities

Jaeho is a data scientist studying at Brown and Alon is a machine learning engineer studying at Berkeley. They met at SPARC, a summer program for exceptional teenagers.

Pragmatic Autonomy by Daniele Cavalli

Research on human autonomy and technology as a cybernetic process

Daniele is a PhD researcher in Cognitive Science who works on cognitive autonomy and its interaction with AI systems.

Replication Radar by Rhea Karty

A relational AI-generated knowledge graph of academic literature

Rhea is a pre-doctoral fellow at Harvard’s metareflection lab working on verified programming.

VerbalizedRL by Hunar Batra

RL training that exposes signs of reward hacking and emergent misalignment

Hunar is an Oxford PhD student working on AI reasoning robustness, with a background at Anthropic and AISI.

WorldWatcher by David Huang

News monitoring to juxtapose divergent views and detect narrative shifts

David is a data scientist in venture investing, and former engineer at Palantir.

The AI x Truth-Seeking grants are made possible through our partnership with the Foundation for Individual Rights and Expression (FIRE).

Thank you to the FIRE team for setting up and scaling this program together, and to Prime Intellect for partnering on compute for projects.

For more on the “why” of this program, see:

We’ll also be seeing demos from our first batch of AI x Truth-Seeking winners in January. Grantees have already shared early prototypes of UIs for more fine-grained interaction with AI systems, ways AI can account for language changes over time, and writing tools that maintain your voice.

To follow the projects and get updates on future round openings, subscribe below:

— The Cosmos Grants (Jason Zhao, Zoe Weinberg, Alex Komoroske, Darren Zhu), and FIRE teams
