A World Unobserved

Humans have always generated knowledge and judged it. That’s changing fast.

In 1831, a twenty-two-year-old naturalist boarded HMS Beagle with a notebook and a willingness to look. For five years, he sailed. He walked the Galápagos, held finches in his hands, noticed that their beaks varied from island to island.

He didn’t know what it meant yet. That would take another twenty years of experiments, of arguing with himself in the margins of his own manuscripts.

In 1859, Charles Darwin finally published. And then the printing press did its work. On the Origin of Species spread across Europe in months. In the process, it transformed our self-conception.

It reached Thomas Huxley, who would defend it. It reached Bishop Wilberforce, who would attack it. Within a year, in a famous Oxford debate, Huxley and Wilberforce went at each other over whether humans descended from apes. Lady Brewster fainted in the audience.

Generation → Dissemination → Judgment. Humans on both ends, technology in the middle.

For a century and a half, this was the shape of things. Our frameworks for protecting knowledge production, like the free speech tradition, focused on dissemination. Every technology that followed—radio, television, the internet, social media—made that middle faster and wider. But the endpoints stayed human.

Until now.

Generation

Darwin needed twenty years before he had something to say. Years of reading, from Lyell to Malthus—but also picking up rocks, breeding pigeons, dissecting barnacles. He sat with his puzzlement.

Generation remained stubbornly, beautifully human. It was tethered to experience, bound by the slow accumulation of evidence, and disciplined by the resistance of reality. Somewhere in every chain, someone had touched something that wasn’t text. Anchoring generation to the world made it expensive, which meant institutions could handle what made it through. Our entire epistemic infrastructure evolved for this world.

Now that cost has collapsed. Today, AI can ingest trillions of tokens—the aggregate digital imprints of human thought—without the experiences that produced them. More and more of what looks like knowledge production is not anchored to anything outside itself.

AI has our conclusions, but not our warrant for them. A paper that reads like years of research can be produced in seconds. The product might look like Darwin’s, with evidence, caveats, and apparent rigor. It might look better. But there are no rocks behind it. No finches. No twenty years of being in the world. More disembodied, statistical text per day than humanity wrote in centuries. That’s what’s entering the system now.

Judgment

Judgment was the backstop. When too much got through, humans would evaluate it. Darwin publishes, Huxley and Wilberforce argue, the scientific community tests, and over decades the theory survives or fails.

More and more each day, AI participates in how we evaluate and interpret what we encounter. It summarizes documents so we don’t have to read them. It answers questions so we don’t have to research them. Each individual offload usually makes sense. Why spend an hour parsing a report when AI extracts the main points in seconds? Why wade through conflicting sources when the model synthesizes them? The tool saves time. It lowers effort.

But something happens when these reasonable choices compound.

Think about GPS. Each decision to use navigation instead of finding your own way is sensible. You get there faster, with less stress. But over years of doing this, something atrophies. You let slip the capacity to navigate: the ability to build mental maps, to orient yourself in physical space, to find your way when the system goes dark. You stop building internal models because the external model is always available.

I don’t fret much about GPS. Alfred North Whitehead was right that cognitive offloading is how we build civilization. We progress by increasing the number of things we can do without having to think about them.

But notice what we offload: arithmetic, memory, navigation—tasks. Judgment is different in kind. Offloading tasks can be civilizational progress. Offloading the capacity that decides what to believe is civilizational regression.

Judgment is not a task to be completed but a capacity that makes you a certain kind of knower: someone who stands behind their conclusions, who can defend them, who revises them in light of evidence. You can outsource your calculations without changing who you are. Can you outsource your judgment and say the same?

Yet each day, we do. Each decision to let AI summarize, synthesize, or conclude seems reasonable in isolation. But in trusting AI to be right, we may lose our ability to discern whether it is actually right. You read the summary, adopt the conclusion, and later can’t explain what would change your mind. That’s the signature of outsourced judgment. A person who can’t trace a belief back to reasons can’t really be persuaded or corrected, only swapped from one confidence-provider to another.

Mill said free speech is the lifeblood of truth. This is an argument many know well, that some have spent their careers defending. It rests on a background assumption: that people can evaluate competing claims and reach their own conclusions. That with practice, the capacity for judgment can develop.

What happens when the practice fades across generations—the way, for many of us, our sense of physical direction already has? When the capacity for judgment that AI was meant to assist never takes root in kids? When we’ve built an autocomplete for life?

The Closed Loop

Now put these together. What happens when both endpoints of the chain are no longer reliably human-accountable?

The loop closes. We enter a world that is less audited, and less observed.

Consider how AI learns. It trains on what humans have written—books, articles, conversations. Its outputs then enter the information environment. People encounter them, share them, build on them. Those outputs shape the next generation of AI, which learns from a world already saturated with the previous generation’s outputs.

Human beliefs shape AI. AI shapes human beliefs. Around and around. The cost of producing plausibility has collapsed, but the cost of verifying truth has not. And that asymmetry isn’t static. The trap tightens as it runs. Verification gets harder with each iteration, because you have to trace further back to find something that touched reality. None of this is inevitable. But it’s the direction of travel. And if human judgment is itself being outsourced, there’s no checkpoint left to catch the drift.

Here’s what this looks like. Researchers fed AI chatbots fake medical terms like “Faulkenstein Syndrome” and “Zorstein Virus.” The chatbots didn’t hesitate. They repeated or elaborated on the error in 83% of cases. “Casper-Lew Syndrome,” it turns out, is a rare neurological condition characterized by fever, neck stiffness, and headaches. Except it isn’t, because it’s not real. But a user, if their judgment is shaped by years of trusting AI summaries, doesn’t discern that. They share it. It enters the web, gets scraped into tomorrow’s training data, and the error gets repeated by the next model with even more authority.

Where in this loop does reality enter? Where is the checkpoint?

Darwin read Lyell on geology, and then spent years picking up the rocks Lyell wrote about. A doctor reads the literature but also sees actual patients (none of whom have “Casper-Lew Syndrome”). Somewhere in the traditional chain, someone had contact with something that wasn’t text.

AI has no such contact. AI isn’t in the world the way you and I are. As we use it today, it doesn’t notice when reality fails to match the pattern. If the training data encodes errors, the AI reproduces them with confidence. And as AI outputs flood the information environment, the proportion of content that has ever touched reality shrinks.

The loop can produce errors, but more importantly, it degrades the capacity to detect errors. And it does so invisibly, while looking like knowledge, spending down an inheritance of reality-contact that took centuries to build. How would you detect this from inside the loop? You can’t google your way out of a polluted Google.

Conjecture and refutation can still appear to happen. But they happen inside a hermetic seal. Conjectures generated from text. Refutations drawn from text. The whole cycle can proceed without anyone’s eyes on anything outside.

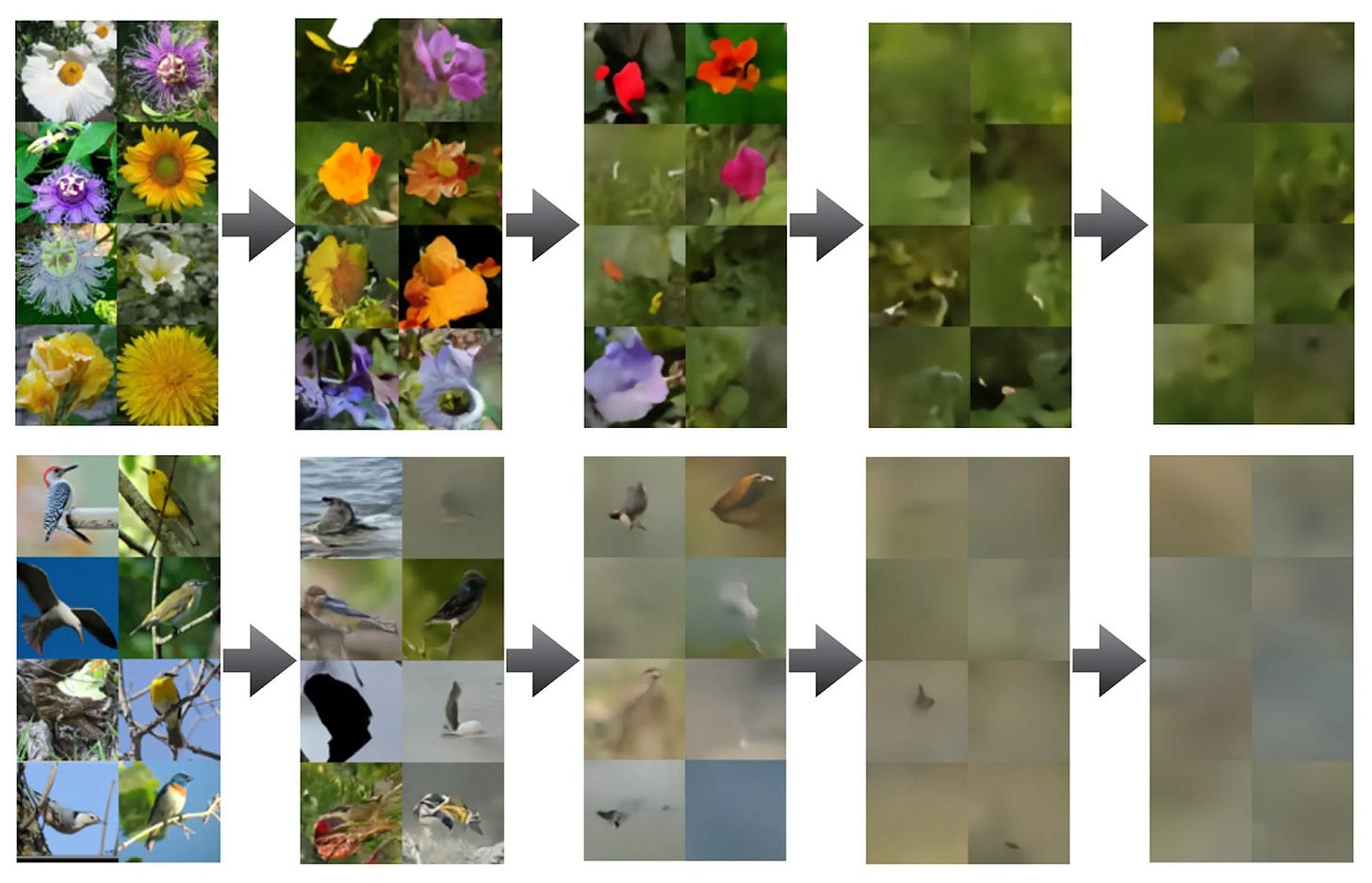

Researchers have a name for what can happen when models train too heavily on their own outputs: model collapse. Give an image model its own outputs and the image degrades into something unrecognizable. Signal becomes noise.

Is there a civilizational version of the same dynamic? We’re spending down epistemic capital, our stock of reality-tethered knowledge. Current AI sounds smart because it trained on centuries of humans who touched reality: scientists, engineers, doctors, mechanics, writers who lived with consequences and wrote them down. It’s parasitic on that inheritance. But as AI outputs saturate the information environment, the inheritance dilutes. We’re in the good phase—the phase where accumulated capital masks the loss.

We see early signs: the homogenization of prose style across the internet, cultural outputs resembling each other, more people citing summaries of summaries instead of primary sources. What will generation five look like? The experiment is already underway.

Of Knowledge and Knowers

This might seem like a problem for epistemologists—interesting, abstract, not obviously urgent. But the implications extend to how we govern our knowing. The free speech tradition emerged because knowledge is social. It requires not just individual knowers but an ecosystem: publishers, critics, scientists, citizens capable of discerning sense from nonsense. If AI alters the conditions under which that ecosystem functions, free expression is implicated.

The free speech tradition remains essential. But something else is happening that our frameworks don’t quite capture.

Free speech is a shield for expression. But AI increasingly operates upstream of expression—in the formation of thought itself. When the drafting, synthesizing, and evaluating happen before you’ve arrived at something to say, the battleground of free speech isn’t only in our courts, it’s in our minds.

Mill gave us several reasons to protect the clash of ideas. We might be wrong, and need correction. The opposing view might contain partial truth we’d otherwise miss. Even when we’re right, a view that’s never contested becomes dead dogma and loses its vital force.

AI disrupts all of this. When AI drafts our thinking, we skip the work that lets us notice we’re wrong. And a view can’t be truly contested unless the interlocutor can be pinned to commitments, shown evidence, and forced, socially or intellectually, to update. AI bears none of those costs.

A judgment you’ve worked through can survive questioning from any direction. A pre-computed judgment you’ve merely absorbed collapses the moment it’s pressed. AI makes absorption effortless, and what’s effortless becomes default. The work of arriving at a view that’s genuinely yours becomes optional.

The right to think for yourself, and the right to speak for yourself, can remain perfectly intact while the capacity to think for yourself—the capacity that makes these rights meaningful—quietly atrophies.

The 20th century’s biggest threat to free speech was coercion. Today, it is comfort: frictionless belief acquisition that makes judgment optional. This is why intellectual diversity, and the practices that keep judgment sharp, matter more than ever. They are the conditions under which genuine thought remains possible at all.

Darwin spent decades looking at the world before he changed how we see ourselves in it. His theory forced a question: what does it mean to be human, if we’re part of the animal kingdom?

We face a reckoning of similar magnitude. What does it mean to think, when thinking can be automated? What does it mean to judge, when judgment can be outsourced?

How do we respond when the chain of knowledge production that once had humans at both ends is no longer reliably human-accountable?

Let’s get to work.

Cosmos Institute is the Academy for Philosopher-Builders, technologists building AI for human flourishing.

With FIRE we run a $1M grant program focused on AI x truth-seeking (opening again in early 2026). We also co-hosted a Symposium last weekend moderated by Jonathan Rauch, with participants from Cosmos, FIRE, DeepMind, Stack Overflow, and Oxford HAI Lab, covering the future of free speech, knowledge generation, and live demos from our first batch of grantees, who are wrapping up their projects. The essay above builds on Brendan’s talk from that event.

Spot on. AI is going to test the entire human conception of critical thought and intellect.

My father and an older friend keep pushing me to just use AI. "You will become addicted" is the cheerful nudging. No, thank you. The last vestige of my freedom is in my mind, where I try to make sense of the world. I've used legal databases for decades to research and write, so I am not a Luddite. But I am still in charge. The errors or wins in persuasion are mine. It is like going to the gym and lifting weights. Building muscle with weights cannot be outsourced, at least not yet.

There is another ethical issue: the theft of proprietary information. A young middle-aged tech bro was on a panel discussing AI. With a cheerful smile, he admonished everyone to just enjoy the ride, sort of like the captain on a plane before takeoff. An older author of books wisely pointed out that what he was asking for was the acceptance of tech companies gaining massive profits off the labor of others, for free. As Heidegger and others warned 70+ years ago, we are the natural resource being consumed.