Introducing the First Cohort of AI x Truth-Seeking Grant Winners

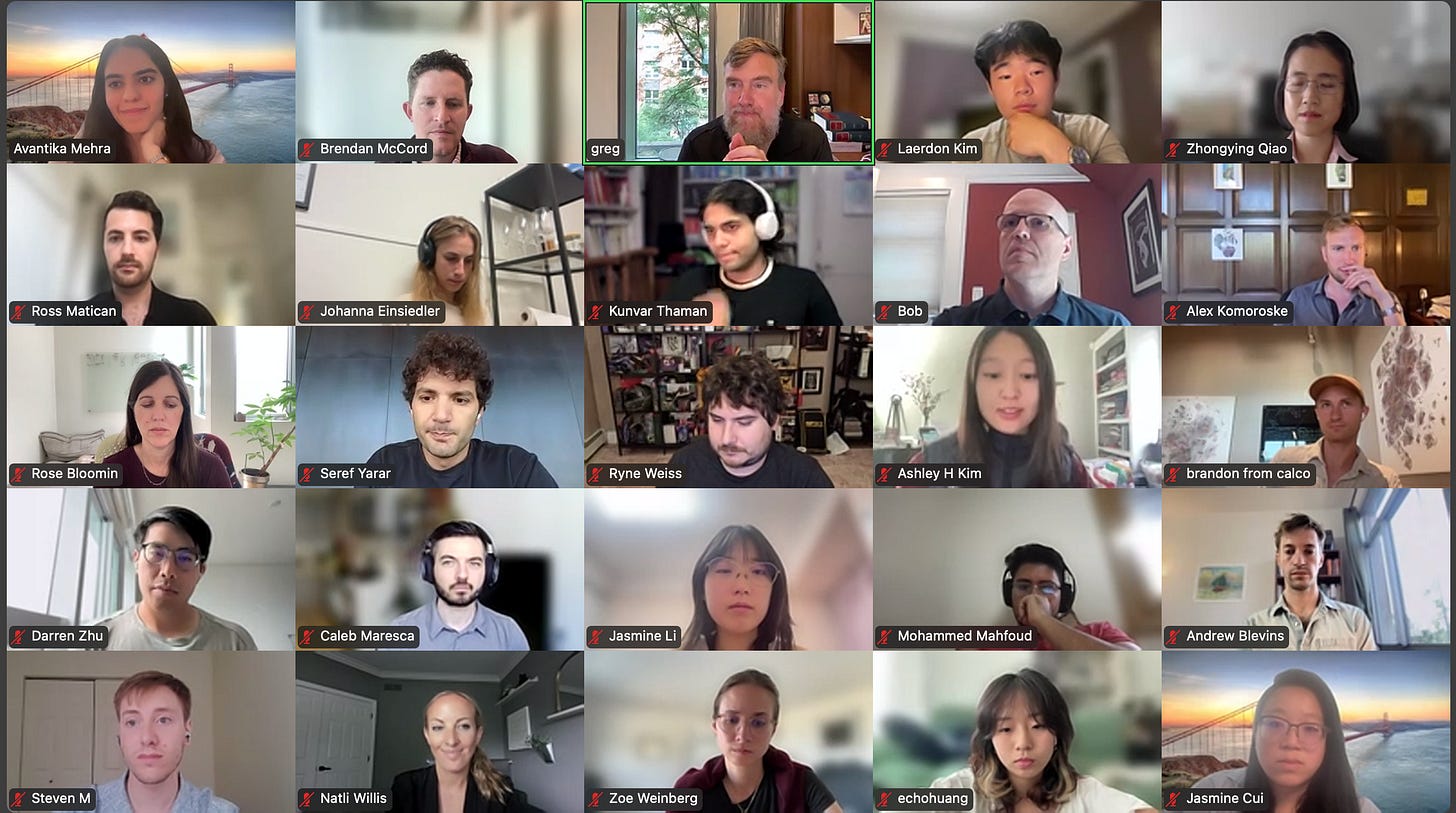

Meet the 27 builders advancing open inquiry and intellectual freedom in AI

AI should empower open inquiry rather than suppress it.

We want to support a future where AI works as a partner in truth-seeking. Where it surfaces counter-arguments, flags open questions, and prompts us to check the evidence. Where errors are chipped away and knowledge grows. Where our freedom and our habit to question stay intact and even thrive.

That’s why in May, Cosmos Institute and the Foundation for Individual Rights and Expression (FIRE) launched a $1M grants program for AI projects that promote truth-seeking.

From hundreds of applications, this first cohort was selected for clarity of purpose, technical feasibility, and a commitment to truth-seeking.

Winners will receive grants of $1K–$10K and access to other top thinkers in philosophy and AI, and will have 90 days to build a prototype. Top projects will be showcased at an upcoming symposium in Austin, TX.

Our goal is to lower the barrier to bold experimentation and to accelerate the development of ideas and talent.

Meet the cohort:

Argument Debugger by Nada Amin

AI that finds gaps in reasoning and suggests repairs

Nada is an assistant CS professor at Harvard and leads the Metareflection lab, which explores easier, faster and safer programming methods.

Authorship AI by Ross Matican

A writing tool that keeps you from over-relying on AI

Ross is an ecosystem builder and former reporter for The Information, currently focused on tech that expands human agency.

BotBicker by Bob Devereux

A truth-seeking tournament for LLMs, judged by people

Bob is a startup founder, former Amazon Scale Engineer, and free speech advocate looking to improve civil discourse.

BridgingBot by Rose Bloomin

A tool to help make contentious online conversations more productive

Rose is co-founder and director of Plurality Institute, where she leads research and field-building at the intersection of AI and human cooperation.

CoTShield by Jiawei Li

A tool to surface hidden logic and deceptive reasoning in AI systems

Jiawei is a Network Security PhD student with research interests in machine learning, game theory, and robust multi-agent systems.

Concept Tracer by Šimon Král

Open-source browser extension that helps examine a concept’s history

Šimon is an AI researcher focused on AI preferences and dialogue systems, with experience across research groups and startups.

Effortful by Jasmine Li and Laerdon Kim

Writing tool to challenge ideas via visualization and drawing connections

Jasmine and Laerdon are AI engineers working on agent evaluations and model honesty, currently studying computer science and English at Cornell.

Identifying AI Politics by Jared Amende with David Rozado

Evaluating and adjusting AI responses to political orientation tests

David is a computer science associate professor who has done pioneering work investigating political ideologies in LLMs. Jared is his student.

Improving AI Explanation by Rubi Hudson

Developing formal incentives for AI to be concise yet accurate

Rubi is an economics PhD student at the University of Toronto working at the intersection of microeconomic theory and AI alignment.

Index Network by Seref Yarar

A discovery protocol for surfacing competing perspectives

Seref is co-founder of Index Network with experience leading engineering teams and building across AI, media, and marketing.

Language Evolution in AI Societies by Ivar Frisch

Analysis of whether and how cooperative language emerges in AI-to-AI interactions

Ivar is an AI research engineer exploring the philosophy of technology, with experience across industry and academia.

Martingale Training by Tianyi Alex Qiu

AI models that avoid belief lock-in via new RL training approaches

Tianyi is an AI alignment researcher working on human truth-seeking and moral progress, with multiple paper awards at NeurIPS and ACL.

Metalens by Johanna Einsiedler

Open-source scientific research platform with AI and human checks

Johanna is a postdoctoral researcher in social data science and machine learning, studying real-life social networks and decision-making.

Perplex by Steven Molotnikov

Surfacing hidden goals in closed AI systems using open models

Steven is an MIT-affiliated AI alignment researcher who previously engineered fusion energy machines, robot restaurants, and rocket engines.

Policy Explorer by Caleb Maresca

An AI tool for analyzing policy impacts and assumptions

Caleb is a PhD student researching the intersection of AI and economic systems, with experience as a research scientist intern at Upstart.

Probing AI Truth-telling by Mohammed Mahfoud

Measuring AI truthfulness by looking at model internals

Mohammed is an AI researcher working on safe-by-design AI and large-scale model safeguards, in collaboration with Mila and Anthropic.

Procedural Knowledge Libraries by Hamidah Oderinwale

Git-like tools to track the reasoning and thought processes behind code

Hamidah is an entrepreneur with experience in policy at the Institute for Progress, engineering at Amazon, and collective intelligence research at Topos.

Remarker by Jonas Kgomo

An open-source Wikipedia for exploring truth-seeking in LLMs

Jonas is founder of the Equiano Institute, with experience in open-source software development and interdisciplinary research.

Reward Hacking Benchmark by Kunvar Thaman

Open benchmark that tests LLM agents for reward hacking and deception

Kunvar is an ML engineer who has worked on top-tier public AI evaluations, measuring benchmark inflation, and mechanistic interpretability.

Socratic Mirror by Zhongying Qiao

AI thought partner that interrogates and invites deeper thinking

Zhongying is a senior software engineer on GitHub’s security products team with a background in computer security and network systems.

Socratica by Jasmine Cui

AI librarian that conducts research and helps people ask better questions

Jasmine is an NBC reporter and data journalist, fellow at MIT’s Algorithmic Alignment Group, and MATS scholar in mechanistic interpretability.

SPACE Terminal by Andrew Blevins

Open-source interface for multi-perspective conversations with AIs

Andrew is a writer and designer focused on tools, protocols and games that support human agency, with a background in media and research.

The Automated Philosopher by Simon Henniger

Reasoning and discovery approaches that spur inquiry rather than AI answer-finding

Simon is an incoming Harvard computer science PhD student with a philosophy background, focusing on reflective and genetic algorithms.

TLM-1B by Brandon Duderstadt

AI that tracks how word meanings change over time

Brandon is founder and CEO of Calcifer Computing, founding CEO of Nomic AI, and Johns Hopkins researcher focused on computational semiotics.

Truth Terminal by Christian McGrew

Public ranking of LLMs on intellectual honesty and dissent tolerance

Christian is co-founder of BridgeUSA, a network promoting constructive discourse across 75+ universities, and a Fulbright Scholar in Taiwan.

TruthLens by Michal Wyrebkowski and Antoni Dziwura

A tool that detects censorship by comparing different AI model outputs

Michal and Antoni are AI researchers with backgrounds in economics and previous projects spanning AI legal tools and data center research.

When Reasoning Fails by Christopher Merck

Tracking where and why reasoning breaks down in chain-of-thought

Christopher is a physicist, ML engineer, and smart home hardware co-founder with a current focus on AI reasoning failures.

These grants are made possible through our partnership with the Foundation for Individual Rights and Expression (FIRE).

Thank you to the FIRE team for setting up and scaling this program together, and to Prime Intellect for partnering on compute for projects.

To follow the projects and get updates on future rounds, subscribe below:

Applications for the next round of AI for Truth-Seeking grants are now open.

— The Cosmos Grants (Jason Zhao, Zoe Weinberg, Alex Komoroske, Darren Zhu), and FIRE teams
