What Will You Build For: Zoe Weinberg

Investing in human agency.

Every builder’s first duty is philosophical: to decide what they should build for. This series asks 9 questions to founders who are building towards their vision of the human good.

Today’s guest is Zoe Weinberg. Zoe is the founder of ex/ante, an early-stage venture fund backing technology that advances human agency and digital freedom. She talks more about her work in this short video.

Before ex/ante, Zoe worked on ethics and policy issues at the National Security Commission on Artificial Intelligence, Google AI, and the World Bank.

1. What are the core questions or beliefs driving your work?

It’s tempting to fall into standard narratives of technology as bringing either salvation or apocalypse. It’s all a bit Manichean—a black-and-white view of technology as good or evil, often in those stark terms.

I’m more interested in questions of how technology can subtly reshape human agency, human rights, and human flourishing, all depending on how it’s built. Technology can be democratic or authoritarian. It can expand freedom or constrict it. It can advance open societies. Or not. There’s very little that’s preordained. There’s very little that’s not ultimately in our hands as builders, funders, and users.

2. What future are you building for?

Through my work at ex/ante, I’m trying to contribute to a future that centers individual autonomy, freedom, and choice, counteracting a digital world that feels increasingly circumscribed and controlled by governments and a handful of large tech companies.

This means a world where systems make knowledge and capability more accessible, and where the friction and difficulty that often accompanies deep understanding isn’t eliminated, but is part of what creates meaning and joy.

One version of this was captured recently in the Resonant Computing Manifesto. This suggested that artificial intelligence can give rise to digital experiences that nourish, rather than drain, us if we build with intention and thoughtfulness. I’m building for the future where innovation isn’t just for innovation’s sake, but toward the creation of a healthier and more free society.

3. What commonly held belief in the tech community do you believe is wrong?

In the tech world I often hear people say that technology is value-neutral: it’s how people use technology that can have good or bad consequences. I disagree.

Technology is purpose-built, and therefore is embedded with intent. What’s its purpose? How’s it meant to be used? Technical architecture always embodies a set of values-laden choices: the design of protocols, interfaces, and systems that don’t just enable certain actions but actively shape the distribution of power, the visibility of alternatives, and the very modes by which users understand their options.

There are a million little decisions that go into product- and company-building that ultimately have a moral valence. To make some of those decisions easier, ex/ante and the Ford Foundation released Builder Blueprints, a library of templates and documents for start-ups to raise the bar in terms of privacy and user rights. It’s just one piece of the equation, but it is all part of the foundation on which a company is built.

Calling technology value-neutral is a cop-out.

“Technical architecture always embodies a set of values-laden choices.”

4. What are your main philosophical influences?

I will never forget the first time I read Thomas Kuhn’s Structure of Scientific Revolutions. I am often reminded that basic assumptions about the world that we take as “science” and “fact” are in reality socially and historically contingent. Science and progress are hardly linear, and new (often contrarian) perspectives are critical to improving our understanding of the world. In the context of my work at ex/ante, I often think about what technological developments on the far edges of the horizon could substantively change my mental model of how the world works or contribute to a true paradigm shift.

Many of the other thinkers and writers that have influenced my work are represented in the Tech for Human Agency Library, including John Stuart Mill, Alexis de Tocqueville, Michel Foucault, Marshall McLuhan and many others.

5. What does human flourishing mean to you?

For me, human flourishing is rooted in human agency: the ability to make substantive decisions about one’s life. It requires a social structure that enables individual freedom, individual judgment, and pursuit of individual purpose.

“Individual” is the key word. Any infrastructure (technological, cultural, political or otherwise) that imposes uniformity diminishes the conditions for human development and progress. Self-authorship emerges from the struggle to understand and solve genuinely hard problems, to navigate moral complexity, to confront perspectives that are at odds with one’s worldview. On a societal level, human flourishing is necessarily plural.

The question for our moment is whether technology can expand or contract the possibilities for self-authorship and whether we can design these systems to preserve the productive friction that gives rise to human flourishing. On an individual level, human flourishing is less about achieving any particular state of happiness and more about having the capacity for self-authorship.

6. What’s one book you’ve read recently that you’d recommend?

Second Life: Having a Child in the Digital Age, by Amanda Hess.

I recently became a parent, so I appreciated this memoir about the ways that the digital world has shaped one of life’s biggest milestones, by an internet culture critic for The New York Times.

7. What’s your most irrational belief?

My irrational prediction of an irrational belief is that we will see the emergence of a religious movement centered on worship of AI and superintelligence. A great awakening for the 21st century.

8. What’s the most interesting tab you have open right now?

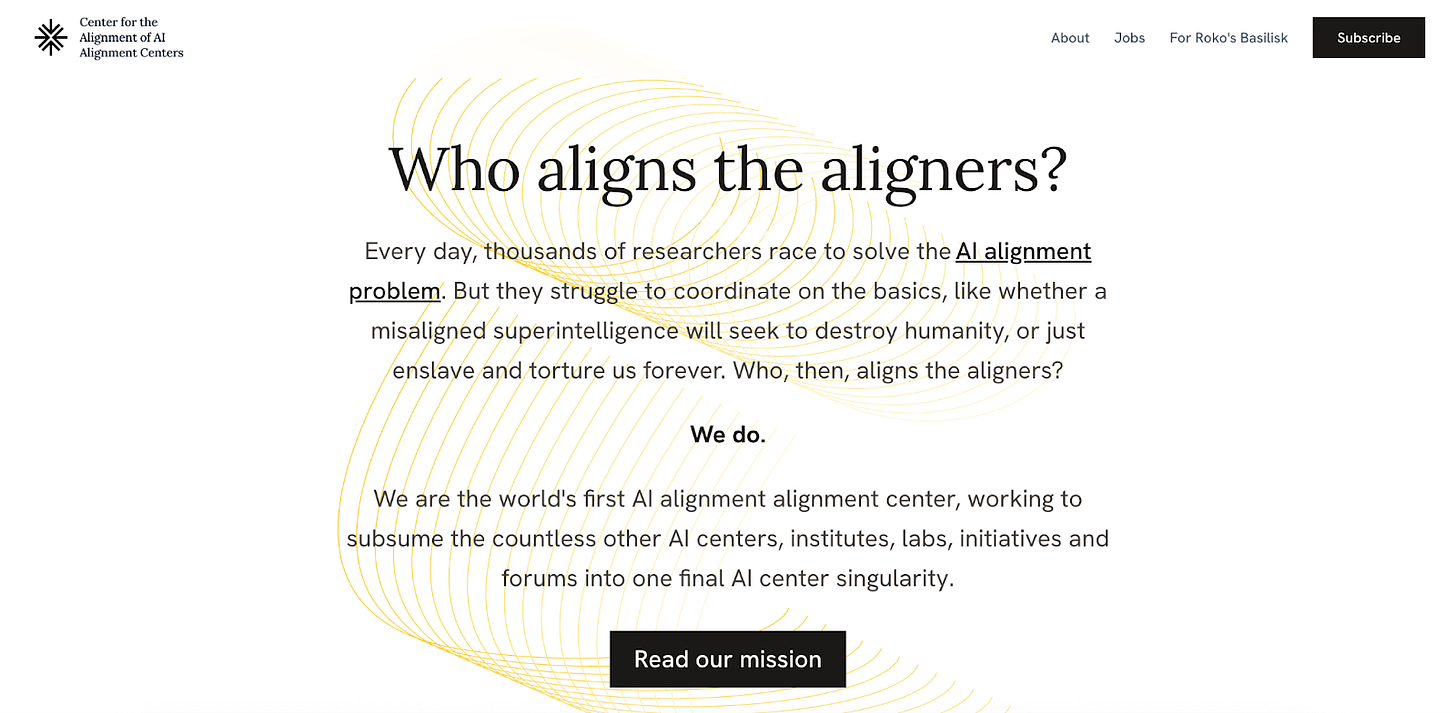

The website for the Center for the Alignment of AI Alignment Centers. I am a big believer in satire and humor as a method for sense-making. I also think creator Louis Barclay is hilarious and brilliant.

9. Who is one writer or thinker today who you think is underrated?

An idea that is underrated is the Free-Market Welfare State. It’s a concept described by Samuel Hammond in a 2018 policy paper, suggesting that a strong social safety net can encourage more innovation, risk-taking, and entrepreneurism.

By giving citizens guaranteed support, such as health insurance and retirement security, we remove the pressure for protectionism and intervention during periods of technological and economic disruption. At the same time, we empower individuals to take risks, knowing they and their families won’t slip through the cracks. The proposal doesn’t fit in a neat partisan box, which perhaps is why it does not get nearly the attention it deserves as an economic policy solution.

Innovation and progress depend on the willingness of individuals to take risks, so policies that make taking a leap of faith easier are ones I’m likely to be in favour of.

Thanks to Zoe for answering “What Will You Build For?”

To get in touch, find her on LinkedIn or at ex/ante.

This is the second installment in this interview series. See our first interview with AI Underwriting Company co-founder Rune Kvist.

Cosmos Institute is the Academy for Philosopher-Builders, technologists building AI for human flourishing. We run fellowships, fund prototypes, and host seminars with institutions like Oxford, Aspen Institute, and Liberty Fund.

If you’re someone who thinks deeply, builds deliberately, and cares about the future AI is shaping—join the Cosmos network.

To nominate someone for “What Will You Build For?” leave a comment below, or send us a DM.

Excellent pushback on the value-neutral tech myth. The idea that technical architecture carries embedded intent makes sense when you think about defaults, friction points, and what gets surfaced vs buried. I've seen this play out at companies where early design choices around data access shaped culture way more than any mission statement ever could.

Thank you so much for this. I have been involved in a similar chain of thought for the past few months and really resonated with most of the stuff here.

I believe that the progress of humanity needs more than just technology. What we need are ideas of social change, economics, governance and politics IN ADDITION TO the technology to understand where we go forward.

What is the ultimate aim of humanity? What is the direction we are heading towards? Having an understanding of where we wanna go further gives us the understanding of what needs to be built. And the answer to this “where” is built on pillars of social science, philosophy, economics etc. Technology has the ability to take us to this “where” but the very definition of this is built on these pillars.