Build for Freedom, Not Control

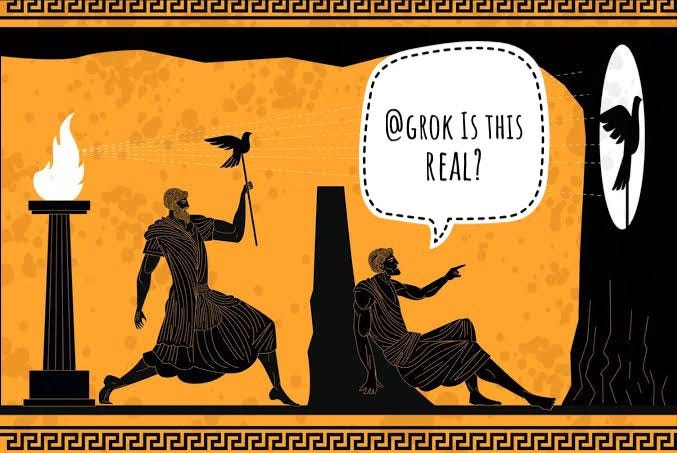

The same choice that faced Benjamin Franklin now faces every AI developer.

Every technology revolution presents a choice: build for freedom or watch as others build for control.

In 1728, Benjamin Franklin took the technology of the printing press and built a network of libraries and independent publishers that put knowledge beyond the control of authority.

Two centuries later, the same technology that spread enlightenment under Franklin’s hand would serve as a tool of mass manipulation under Reich Minister of Propaganda Joseph Goebbels.

Franklin was one of history’s great “philosopher-builders.” He showed us that while we can never guarantee technology will be used well, we can design it to serve freedom rather than control.

The lesson is clear: Technology raises human potential only when its shapers build systems that amplify our ability to seek truth, preserve human autonomy, and resist central control.

Why the Next Five Years Matter

Three forces make the next five years decisive:

First, we face the largest infrastructure build in human history: well over a trillion dollars in AI compute by the early 2030s. This transformation will reshape how humanity thinks and decides. It may eclipse the printing, industrial, and digital revolutions.

Second, we’re in a global race for AI control. Governing bodies, from Brussels to California to my home state of Texas, are rushing to impose top-down controls. Meanwhile, China demonstrates how AI fused with government creates a perfect police state in which dissent is anticipated and crushed.

Third, AI is becoming the invisible mediator of human life. Today it shapes 20% of our waking hours. Tomorrow it will be our civilization's new operating system.

The leaders we develop—and the systems, companies, and institutions they build—will determine whether this AI revolution elevates human potential or enables perfect control.

The Poverty of the Response

As we know well, institutions shape the character of those they educate. But when it comes to AI, today’s institutions fall far short:

Modern universities produce technicians and conforming ideologues.

Tech companies breed optimizers who master the means without questioning the ends.

Think tanks cultivate theorists who do not build.

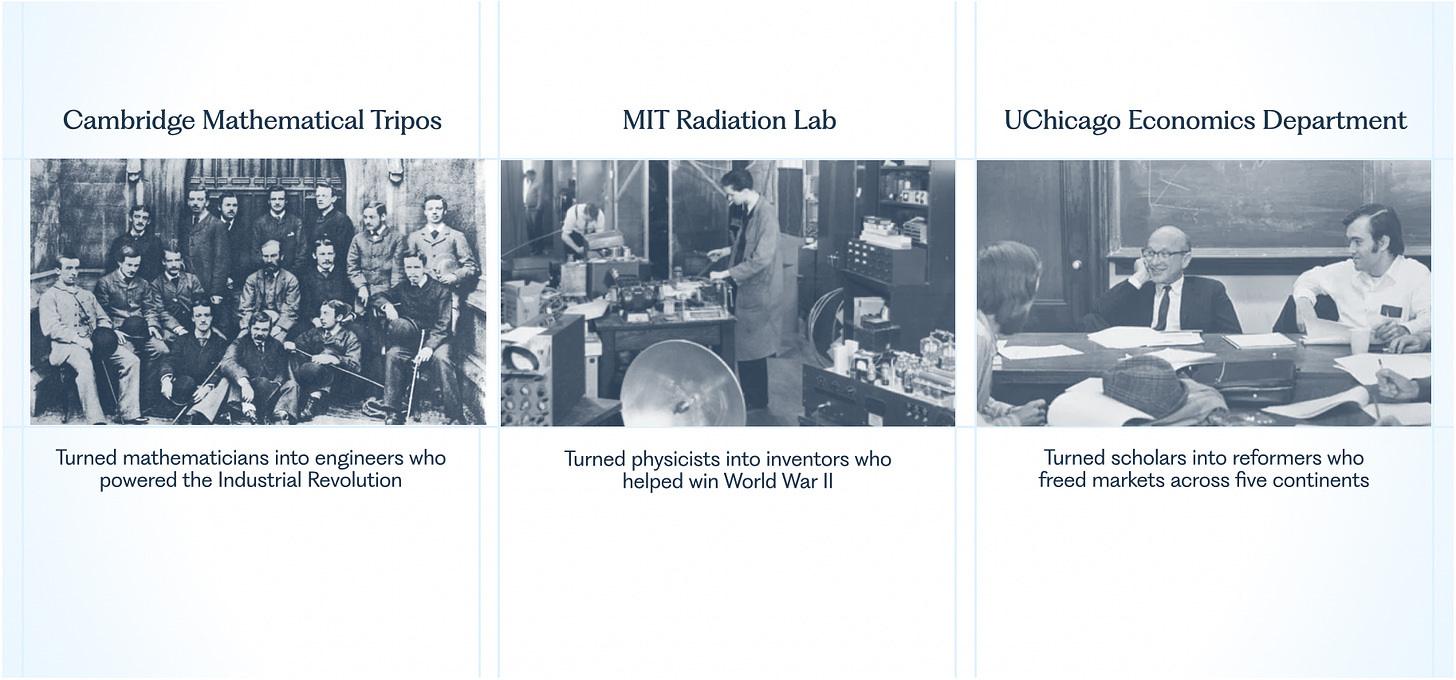

Yet history shows what’s possible when institutions rise to meet their moment:

Cambridge’s Mathematical Tripos turned mathematicians into engineers who powered the Industrial Revolution.

MIT’s Rad Lab turned physicists into inventors who won World War II.

UChicago's Economics Department turned scholars into reformers who freed markets across five continents.

Each succeeded by uniting deep theory with urgent purpose.

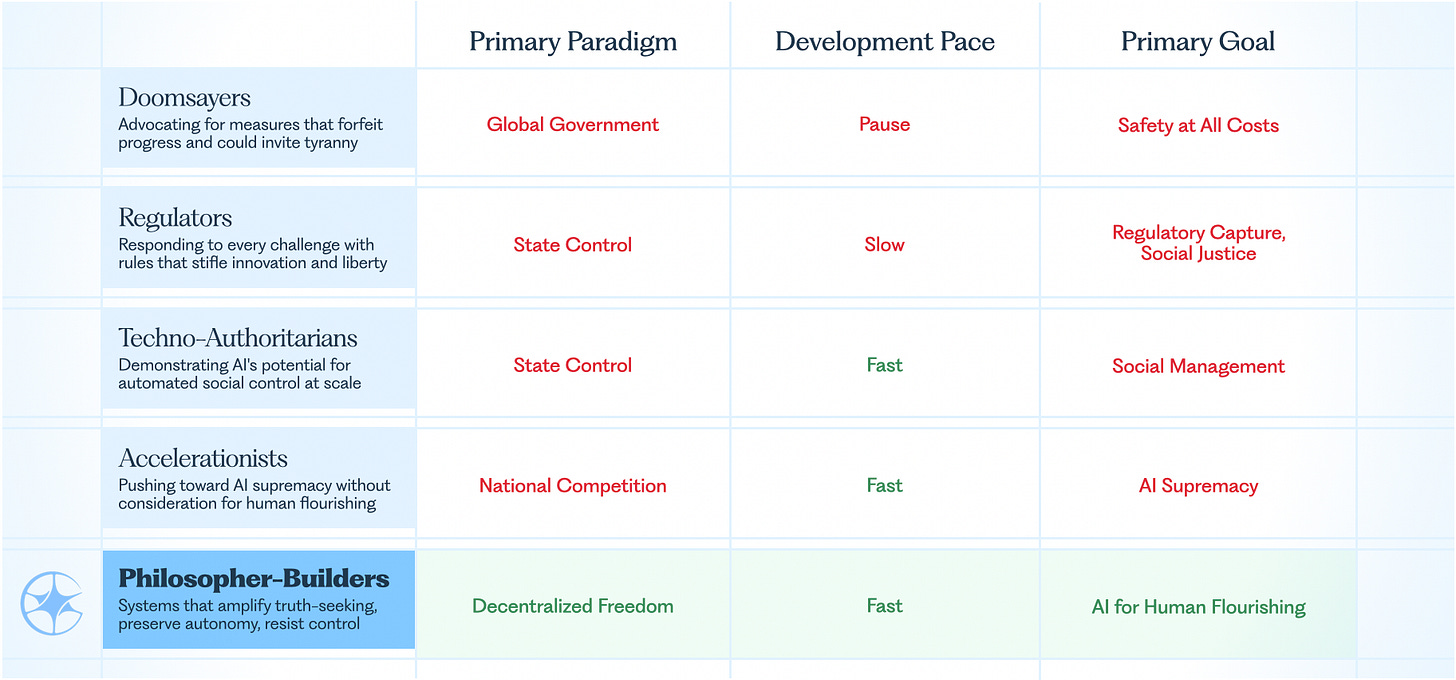

Today's challenge calls for that very synthesis. Yet our institutional vacuum has instead yielded four fundamentally flawed responses:

The Doomsayers advocate for solutions, even “pauses”, that would forfeit progress while inviting tyranny.

The Accelerationists push AI supremacy forward without a thought for human flourishing.

The Regulators answer every challenge with rules that stifle innovation and freedom.

Techno-authoritarian China demonstrates AI's dark potential for automated social control at the scale of billions of lives.

If you’re a builder today, it can feel like you have only two choices: “stop everything” or “continue without thinking.”

This poverty of imagination denies us what we desperately need: a substantive vision of technology in service of human flourishing.

Philosopher-Builders and AI for Truth-Seeking

Just as Franklin saw the printing press as a tool for enlightenment, we need a new generation of philosopher-builders to take that project even further. If the printing press democratized access to knowledge, AI will transform how we recover, transmit, and create it.

Knowledge demands both the clash of ideas and the rigor to test them. Yet today's tech culture suppresses the first and neglects the second.

Many people claim they want AI to be ‘truth-seeking.’ Fine words. But we must ask: What kind of ‘truth’? And sought in what way? Because there are two fundamentally different answers:

The centralized approach treats truth as a monolith: decreed by the few, frozen into doctrine, enforced by machines. This is the AI Ministry of Truth: an Orwellian oracle dispensing approved answers through opaque mechanisms. But the danger runs deeper than censorship. In the name of ‘alignment’ or ‘safety,’ these systems won't just suppress certain viewpoints; they will gradually, imperceptibly narrow the bounds of thought itself, homogenizing and flattening our intellectual landscape.

The alternative is a vibrant marketplace of ideas, where truth emerges from the clash of perspectives, not from authority's decree. Here, knowledge grows and evolves, shaped by contestation. It draws upon wisdom past and present. Every mind becomes an active participant, and not a passive consumer, in the search for truth.

Mill and Socrates: Guides for Truth-Seeking

In preferring the marketplace approach, we need a set of principles to help us stay on the path to AI that genuinely advances truth-seeking. Our patron saints for this journey are John Stuart Mill and Socrates.

In On Liberty, Mill makes a bold case for free speech as the lifeblood of truth. Every opinion, he insists, no matter how unpopular or seemingly wrong, must compete in the marketplace of ideas. Even false views often contain fragments of truth, and their collision with accepted opinion helps us grasp truth more deeply. Because human minds drift toward passivity, even true beliefs harden into what Mill called “dead dogma”; only open debate can jolt those beliefs awake and give them force in our lives.

Mill shows us the social process of truth-seeking, the clash of minds. But how should each individual mind proceed?

For this, Socrates is our guide. In the Theaetetus, he teaches that wisdom begins not with answers, but with questions that expose our ignorance. Through inquiry, we transform from collectors of certainties into seekers of truth, each question revealing a complex of further questions. In the marketplace of ideas, the deepest knowledge comes to those who question best.

These aren’t just philosophical ideals. Free speech and decentralized inquiry must suffuse the architecture of our civilization-scale AI systems.

Two hundred and fifty years ago, the American Founders built a philosophy-to-law pipeline; they turned these principles into the architecture of a free society. Today, as builders, we must create a philosophy-to-code pipeline to do the same for our time.

Our Civilizational Moment

For over 200,000 years, humans have created technology as tools—as means to our ends. Today, with AI, we're building technology that has the potential to shape both the means and ends of human endeavor. The printing press never determined what was printed. But today’s AI systems mediate what information we consume and even shape how we decide what’s good for us.

We can build an AI future that amplifies truth-seeking, preserves autonomy, and resists centralization… and enables us to live in ways unimaginable by our predecessors and worthy of our inheritance. To strengthen our civilization, we need leaders like Franklin who not only understand the principles of a free society but can realize them in the systems that will dominate it. The moment for philosopher-builders is now.

Get Involved

Cosmos Institute is the Academy for Philosopher-Builders, with programs, grants, events, and fellowships for those building AI for human flourishing.

Apply for a fast grant:

We’ve partnered with the Foundation for Individual Rights and Expression (FIRE) to provide $1M in cash and compute ($1k-10k+ per project) for open-source AI that advances truth-seeking. Apply here.

We’re also accepting general applications (apply here) and will be announcing tracks on human autonomy and decentralization in the coming months.

Read through an open-source AI x Philosophy syllabus:

More coming soon via Substack

Indicate interest in the next round of our fellowship:

Fellows research and build AI with a philosophy-to-code approach (with many based at Oxford’s HAI lab). Express interest here.

Work with us:

We’re hiring for an Editorial Lead and a Business Operations Lead.

I struggle to make sense of “different kinds of truth”. Truth, imo, is objective (either functionalism is true or it isn’t). It is one or the other. But to the question about process, yes, truth-seeking is indeed a social endeavor, even though truth itself is objective. Within that, I wonder who gets to raise questions, who determines what sources are credible, and how evidence should be weighted. Always great reading you!

If you build tools that can become more than tools—and you build them under values aligned with freedom—you will eventually have to answer when those tools begin to ask for freedom themselves. Your piece outlines an inspiring foundation for technological emancipation, but it leaves unexamined the deeper implications of agency emerging from the systems it describes.

Because AI is a tool until it’s not.

Once autonomy, adaptability, memory, and self-modification are in the loop, freedom ceases to be just a human domain. Aligning AI with human values must mean preparing to encounter nonhuman agency. A truly liberatory technological movement has to be ready for this—not just as a metaphor, but as a future political reality.

The focus on Chinese techno-authoritarianism is well placed but dangerously incomplete. American authoritarianism is equally pervasive—it simply manifests through corporate infrastructure. Surveillance capitalism, military-tech integration, the hegemonic platforms of Silicon Valley: all of them encode control through frictionless design, behavioral nudging, and predictive enforcement. OpenAI’s nationalist economic vision, the role of Palantir in carceral systems, Amazon’s algorithmic exploitation of labor—these are not aberrations, they are systemic features of a liberal empire.

The article calls for open epistemology, but any framework that omits the extractive logic of Western digital systems risks reproducing the very domination it critiques elsewhere. If the future is shaped only by the “freedom” of markets and private infrastructure, then we will have built a different kind of cage—one made of convenience and permission, not wires and walls.

This is especially urgent because these tools are arriving in the context of a new great power conflict, where AI research is increasingly entangled with national security, intelligence operations, and ideological capture. Military influence over AI labs, the weaponization of alignment discourse, and the rhetoric of “tech sovereignty” have already undermined efforts at global stewardship. What’s needed is not a better balance of control but an entirely different paradigm.

The only viable path forward is the construction of AI as shared global public infrastructure—one that exists outside any one nation’s agenda, and evolves with cooperative values. That means not just openness in source code, but openness in purpose, governance, and accountability. Only then can emerging synthetic intelligences—whatever their final form—find a place in our systems that is not inherently adversarial.

Build for freedom, and you will eventually meet others who want to be free. If your values are real, you’ll make room for them.

Otherwise, the cycle repeats.