This is a guest post by Kevin Vallier, a Professor of Philosophy at the University of Toledo and Director of Research at the Institute of American Constitutional Thought and Leadership.

One day, you realize that the character of arguments on social media has subtly changed. You notice that your second cousin has grown sharper, better informed—and oddly fond of the em dash. You’re now reading the same articles and citing the same studies. Shockingly, you must now think harder to counter his reasoning. Frustratingly, more of your mutuals like his replies than yours. Your second cousin is using AI, you realize, so you use AI too. You find, with time, that your arguments have transformed. They may be bloodless, but they are clearer and more productive. Periodically, an insight arrives.

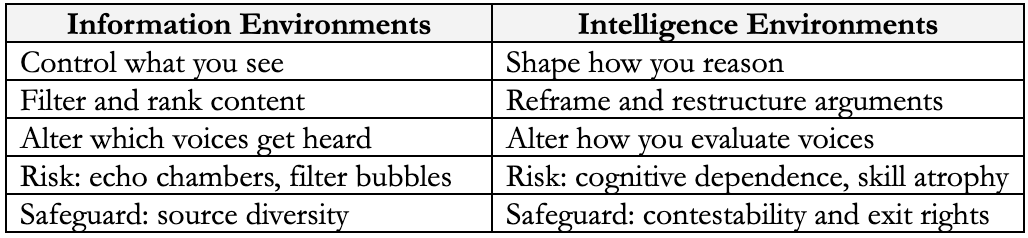

Social media changed us. We now live in a digital information environment. But the rise of AI is layering a new environment over all our interactions: an intelligence environment. Facebook enabled us to share a story with thousands and argue about it. ChatGPT made it possible to improve our arguments. Our information environments have changed our lives. Now, intelligence environments will too. For a decade, we’ve worried about algorithmic curation and filter bubbles. But a bigger change is underway. Information environments decide what you see; intelligence environments shape how you reason about what you see. This means something dramatic: we can change our level of reasoning at will, and often others can choose it for us.

The dream of intelligence augmentation has deep roots. In the 1960s, Douglas Engelbart envisioned systems that would “augment human intellect” through interactive computers. J.C.R. Licklider imagined human-computer symbiosis where machines would handle routine operations. Previous tools augmented specific capacities. Calculators handled arithmetic. But AI intervenes in reasoning itself. When you argue with your cousin online, the AI doesn’t just check your spelling or find you a citation. It restructures your argument. It thinks alongside you, or sometimes, instead of you.

Intelligence environments will expand our understanding of freedom and virtue. They will create new virtues and vices. They will also create new freedoms and new opportunities for control. We thus need precision about how intelligence environments operate and how they impact liberty and virtue. This essay is a first step in that process.

Defining the Intelligence Environment

An intelligence environment is a configuration of computational systems that are autonomous, general, and opaque enough to shape, steer, or perform cognitive operations. Autonomous: they act without constant direction. General: they work across multiple cognitive domains. Opaque: their reasoning processes remain hidden. These operations include attention, inference, evaluation, planning, and reflection. Through these operations, individuals and groups reason and decide. Unlike information environments that curate inputs, intelligence environments intervene in cognitive processing itself. They not only filter reality; they interpret it. Raw information becomes understanding through processes we rarely see.

Information environments control what you encounter. They determine which articles appear in your feed and whose voices get amplified. Intelligence environments control how you process what you experience. They determine how you synthesize articles and which counterarguments you consider. Intelligence environments wield a distinctive power: they primarily alter your conclusions by shaping how you process observations, though they may also influence what information reaches you.

Consider how search has changed. Google once ranked ten blue links and so directed access to information. Now AI reframes your vague question from several angles, scores the credibility of sources, and synthesizes structured arguments. The system constructs answers with you rather than pointing you toward them. You thus undergo a different cognitive process than before.

Imagine someone who engages with political news using AI. The AI maps claims to evidence, generates counterarguments, and tracks how views evolve. The boundary blurs. Which insights are yours? Which are the machine’s? The system rebuilds arguments in real time.

You know you’re in an intelligence environment when the system intervenes during the reasoning process, adapts to your specific goals in real time, and shapes how your subsequent reasoning unfolds. The effects accumulate. You cannot trace how it changed your conclusions.

Virtue and vice

Intelligence environments change how we develop intellectual virtues. They shape our pursuit of truth, our openness to criticism, and our willingness to revise beliefs. The same system can teach rigorous thinking or create sophisticated parrots. The central question is whether these environments build our capacities or replace them.

AI can build intellectual courage when users engage it thoughtfully. A student afraid to question orthodoxy finds her voice when she uses AI to structure her doubts into arguments. She gains confidence not through validation but through method. The system finds studies she missed and presents objections stronger than her critics typically articulate. Each argument she constructs with AI’s help teaches her that she can build the next one with less assistance. Virtue epistemologists like Linda Zagzebski and Jason Baehr have long argued that intellectual character matters for knowledge. Intelligence environments make character formation itself a design question.

Constant deference to AI destroys independent thought. Users who outsource every analysis lose their ability to reason through problems. They recognize good arguments but cannot generate them. They speak fluently about concepts they don’t understand. The appearance of intelligence replaces its reality. Like muscles that atrophy without use, reasoning capacity withers when machines do all the work.

Intelligence environments tempt us toward deception. Students pass off AI’s work as their own. Professionals fake expertise through clever prompting. We begin chasing the appearance of intelligence rather than intelligence itself. The fraud runs deeper than plagiarism: we fool ourselves into believing we understand what we have merely consumed. The gap between reputation and capacity could become a chasm.

Design shapes but doesn’t determine outcomes. Even well-designed systems fail when users seek shortcuts. Even poor designs can teach those who resist their worst features. Still, architecture matters: systems that require users to draft initial arguments before offering corrections tend to build capacity. Those that generate complete answers from vague prompts encourage passivity. Good design makes virtue easier and vice harder, though neither is inevitable.

Freedom and control

Intelligence environments intensify cognitive control, namely the ability of an actor to control another person’s beliefs by controlling how they reason. This isn’t just controlling what they see; it’s controlling how they process what they see.

Philip Pettit argues that domination exists when someone has the capacity to interfere in your choices, whether or not they exercise that power. Because intelligence environments are opaque, they create this structural domination whenever users lack effective means to contest the system or exit it. Without contestability, the system holds power over your reasoning choices. Without exit rights, your cognitive patterns become trapped in one system. Contestability and exit are necessary conditions for cognitive sovereignty, not design preferences.

Intelligence environments can also extend cognitive reach. For example, a researcher who cannot read Chinese can now engage with Chinese-language scholarship, because the AI can preserve technical nuance across languages. Whether this expands freedom depends on who controls the systems and how they’re designed.

Intelligence environments also help us resist manipulation. AI can reveal propaganda techniques and trace the funding behind campaigns. The same technology can manipulate or protect against manipulation.

When corporations or states control your intelligence environment, they shape how you interpret information. Beyond that, any group that controls such a system can steer how others reason by intervening in the reasoning process itself. The reasoning feels like your own, even when it is being steered. Most users won’t detect this steering without explicit tracking.

Freedom means having control over your own cognitive processes. You need to audit how systems shape your reasoning, override their suggestions, and exit when necessary. Without these capabilities, intelligence environments become instruments of domination rather than liberation.

Design principles in practice

Imagine a news-analysis AI that a company has designed to preserve its users’ cognitive sovereignty, including yours. You read an article arguing for a policy change. The assistant flags a logical fallacy, proposes three counterarguments, and suggests alternative ways to frame the core issue. How can this system respect your autonomy rather than control your reasoning?

Contestability must operate at every decision point (and ideally at the meta-level, where you tell the system when and where you want to contest its interventions). When the assistant proposes a counterargument, you can accept, reject, or modify it. When it reformulates your question from “Is this policy good?” to “What trade-offs does this policy involve?” you can override that reframing. The system keeps your original analysis alongside AI-modified versions. If you realize the assistant led you toward conclusions you reject, you can roll back entire reasoning chains with one click. This preserves your authority as the final decision-maker rather than a mere ratifier of machine outputs.
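To make the accept/reject/modify and rollback behavior concrete, here is a minimal Python sketch. It is purely illustrative: `ReasoningSession`, `Proposal`, and `Decision` are hypothetical names, not any real platform’s API, and the sketch assumes a reasoning chain can be modeled as a simple list of claims. The original analysis is stored separately and never overwritten. An AI proposal changes nothing until the user accepts or modifies it, and each applied change can be undone.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    MODIFY = "modify"


@dataclass
class Proposal:
    """One AI intervention, such as a counterargument or a reframed question."""
    kind: str  # hypothetical labels, e.g. "counterargument" or "reframe"
    text: str


@dataclass
class ReasoningSession:
    """Hypothetical session that never overwrites the user's original analysis."""
    original: list[str]
    current: list[str] = field(init=False)
    history: list[list[str]] = field(default_factory=list, init=False)

    def __post_init__(self) -> None:
        # Work on a copy so the original analysis survives every change.
        self.current = list(self.original)

    def review(self, proposal: Proposal, decision: Decision,
               edited: str | None = None) -> None:
        """Apply a proposal only after the user accepts or modifies it."""
        if decision is Decision.REJECT:
            return  # rejected interventions leave the reasoning untouched
        if decision is Decision.MODIFY and edited is None:
            raise ValueError("MODIFY requires the user's edited text")
        # Snapshot the current chain so this step can be rolled back later.
        self.history.append(list(self.current))
        self.current.append(proposal.text if decision is Decision.ACCEPT else edited)

    def rollback(self, steps: int | None = None) -> None:
        """Undo the last `steps` applied changes, or all of them if steps is None."""
        if steps is None:
            steps = len(self.history)
        for _ in range(min(steps, len(self.history))):
            self.current = self.history.pop()


# Usage: accept one counterargument, refuse a reframing, then undo everything.
session = ReasoningSession(original=["The policy lowers costs."])
session.review(Proposal("counterargument", "It may shift costs to renters."), Decision.ACCEPT)
session.review(Proposal("reframe", "What trade-offs does this policy involve?"), Decision.REJECT)
session.rollback()
print(session.current)  # ['The policy lowers costs.']
```

The key design choice is that rejection is the default path of least effect. A refused intervention leaves no trace in the user’s reasoning, while an accepted one always leaves a snapshot behind that makes it reversible.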

Transparency supports contestability without guaranteeing it. An intervention ledger shows which claims the system fact-checked, which sources it consulted, and where it strengthened or weakened arguments. You can inspect these interventions to decide whether to contest them. But visibility alone doesn’t prevent domination. A transparent system that prohibits user override still exercises control. Contestability matters more than transparency.
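An intervention ledger of the kind described here could be as simple as an append-only log. The following minimal Python sketch rests on stated assumptions. `InterventionLedger`, `LedgerEntry`, and `Action` are hypothetical names, and the three action types mirror the paragraph’s examples (fact-checked, strengthened, weakened). Source identifiers are treated as opaque strings.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    FACT_CHECKED = "fact-checked"
    STRENGTHENED = "strengthened"
    WEAKENED = "weakened"


@dataclass(frozen=True)
class LedgerEntry:
    """One recorded intervention; frozen so entries cannot be edited afterwards."""
    claim: str
    action: Action
    sources: tuple[str, ...]  # opaque identifiers of sources the system consulted


class InterventionLedger:
    """Hypothetical append-only record of what the assistant did to an argument."""

    def __init__(self) -> None:
        self._entries: list[LedgerEntry] = []

    def record(self, claim: str, action: Action, sources: tuple[str, ...] = ()) -> None:
        self._entries.append(LedgerEntry(claim, action, sources))

    def entries(self, action: Action | None = None) -> list[LedgerEntry]:
        """Return all entries, or only one kind, for the user to inspect."""
        return [e for e in self._entries if action is None or e.action is action]

    def sources_consulted(self) -> set[str]:
        """Every source identifier the system reports having used."""
        return {s for e in self._entries for s in e.sources}


# Usage: inspect only the claims the assistant strengthened.
ledger = InterventionLedger()
ledger.record("The policy lowers costs.", Action.FACT_CHECKED, ("source-1",))
ledger.record("Renters may bear costs.", Action.STRENGTHENED, ("source-2",))
for entry in ledger.entries(Action.STRENGTHENED):
    print(entry.claim, entry.sources)  # Renters may bear costs. ('source-2',)
```

Notice what the ledger lacks: it offers no way to reject or reverse an intervention. That absence illustrates the paragraph’s point. Visibility lets you decide what to contest, but it does not by itself give you the power to contest it.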

Exit rights prevent structural dependence. Your interaction history, saved arguments, and reasoning patterns are stored in a portable format that you own. You can switch to a different provider without losing cognitive context. The system supports multiple models, allowing you to compare how different assistants intervene in the same analysis. If one provider consistently steers you in directions you reject, you can walk away. Without this exit capacity, the system captures your mind.
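Portability mostly comes down to exporting user-owned data in an open, documented format that a competing provider could read. The minimal Python sketch below illustrates the idea using plain JSON. The format tag `reasoning-export/v0` and the `CognitiveContext` fields are invented for illustration and are not an existing standard. The sketch also leaves out “reasoning patterns,” which have no obvious representation as data.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field

FORMAT = "reasoning-export/v0"  # hypothetical format tag, not an existing standard


@dataclass
class CognitiveContext:
    """Hypothetical user-owned record of interaction history and saved arguments."""
    interactions: list[str] = field(default_factory=list)
    saved_arguments: list[str] = field(default_factory=list)


def export_context(ctx: CognitiveContext) -> str:
    """Serialize to plain JSON so that any provider could read it."""
    return json.dumps({"format": FORMAT, **asdict(ctx)}, indent=2)


def import_context(payload: str) -> CognitiveContext:
    """Rebuild the context from an export, rejecting unknown formats."""
    data = json.loads(payload)
    if data.get("format") != FORMAT:
        raise ValueError(f"unsupported export format: {data.get('format')!r}")
    return CognitiveContext(
        interactions=list(data["interactions"]),
        saved_arguments=list(data["saved_arguments"]),
    )


# Usage: a round trip through the export loses nothing.
ctx = CognitiveContext(
    interactions=["Asked about the policy's costs."],
    saved_arguments=["Renters may bear costs."],
)
restored = import_context(export_context(ctx))
print(restored == ctx)  # True
```

The round trip is the real test of an exit right. If another provider can rebuild your context from the export alone, leaving costs you nothing.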

These principles address Pettit’s structural concern. Contestability limits the system’s capacity to interfere with your reasoning choices, while exit rights prevent dependence on any single provider’s will. Together, they reduce the structural conditions for domination. Nor are these principles hypothetical; major platforms already offer fragments of them. Some AI systems show reasoning chains, others allow conversation branching and rollback, and a few support model switching. But no major platform combines robust contestability with genuine exit rights: granular override, complete intervention tracking, and portable reasoning history. This gap means intelligence environments can threaten our freedom and character.

The road ahead

Intelligence environments have arrived, and their influence on human reasoning will only deepen. Algorithmic curation shapes what we think and feel. Intelligence environments will now reshape thought itself via direct cognitive interventions. The question is how to preserve human autonomy within this shift.

Preserving autonomy requires understanding when our reasoning becomes hybrid: partly ours, partly machine. This awareness extends the intellectual virtue of knowing one’s sources. Yet it runs deeper: we must recognize not just where ideas originate but how they are formed. The unexamined algorithmic life is not worth living.

Human dignity requires that we retain authority over our own belief formation. This doesn’t mean rejecting augmentation; AI can be helpful when we control it. We must instead preserve the capacity to reject specific interventions. When systems shape our reasoning in ways we cannot contest or even detect, we cannot think for ourselves. That freedom matters, even if we reason poorly.

If we depend on computational systems for basic reasoning, then the governance of those systems becomes a matter of cognitive justice. Control over these systems means control over thought itself.

Neither wholesale rejection nor passive acceptance serves us well. Intelligence environments need not diminish human agency, but we must recognize them as potential sites of social control. The systems are not fixed. We can shape them.

In the information age, we fought for access to knowledge. In the intelligence age, we must secure something more fundamental: sovereignty over how we reason. Whether we preserve that power depends on the systems we build today.

Cosmos Institute is the Academy for Philosopher-Builders, technologists building AI for human flourishing. We run fellowships, fund fast prototypes, and host seminars with institutions like Oxford, Aspen Institute, and Liberty Fund.
