A Return to Wholeness

AI forces a return to “natural philosophy,” just as science once needed to leave it

This is a guest post by Irina Haivas, a venture capital investor in AI and deep tech. She has a background across investing, strategy, medicine and policy. If you want to pitch us an essay, send us a suggestion using this form.

Also, last week we launched applications for our Fellowship at Oxford’s Human-Centered AI Lab. Here are more details on the Fellowship; apply by Dec 6th.

A few weeks ago, chasing my youngest daughter through London’s Science Museum, I glimpsed the words “Natural Philosophy” on a glass panel.

I stopped mid-stride. Here was a reminder that the science we know today, including AI, began not as fragmented disciplines, but as a unified field of inquiry that blended physics and moral reasoning, mathematics and metaphysics, observation and meaning.

The Fracturing of Knowledge

The transition from Natural Philosophy to modern science was a rupture that unfolded across generations.

Aristotle’s Physics wove together observations about motion with teleological (that is, purpose-oriented) questions about natural ends and human good. Medieval scholars like Thomas Aquinas saw no contradiction between theological inquiry and natural investigation as paths to understanding the divine order. In the Renaissance and early Enlightenment, Galileo, Descartes, Newton, and Leibniz were all polymaths who moved fluidly between what we today call the hard sciences, the humanities, and even art.

The 17th century brought a methodological revolution. Francis Bacon’s Novum Organum (1620) championed empirical observation and inductive reasoning over philosophical speculation. Descartes’ method of systematic doubt gave priority to what could be clearly and distinctly perceived. London’s Royal Society, founded in 1660, adopted the motto Nullius in verba (“Don’t take anyone’s word for it”), signaling an emphasis on experimental results over inherited authority.

By the 19th century, this fracturing was accelerating. Universities organized knowledge into distinct departments. Physics separated from natural philosophy in the 1830s and 1840s. Biology, chemistry, geology, and other sciences all followed suit and claimed their independence. William Whewell, the English polymath, coined the term “scientist” in 1833 to describe this new breed of specialized investigator. The natural philosopher, who once roamed freely across domains of inquiry, was replaced by the disciplinary expert.

This specialization brought clear benefits in rigor, empirical precision, professional standards, reproducible results, and overall compounding progress within a field. The Enlightenment project, which gave us the scientific method, created the conditions for science without metaphysical commitments. It enacted a great separation: the divorce of “is” from “ought,” of describing nature from prescribing values, of technical capability from ethical responsibility. Science would tell us what is, philosophy would worry about what ought to be, and these two would rarely converse.

The End of Explanation

But something stranger happened in this transition than we realize. The scientific method didn’t just organize knowledge differently; it made a promise: Give us your mysteries, and we will return explanations.

Bacon’s empiricism, Descartes’ systematic doubt, and the Royal Society’s “Nullius in verba” all rested on the single assumption that we could break things down, understand their mechanisms, and explain how they work. Reductionism was both method and guarantee. We would never need to take anyone’s word for it because we could see for ourselves.

AI breaks this bargain.

When a large language model generates text, or a neural network identifies a tumor, or an AI system recommends a prison sentence, we often cannot explain why. Looking harder doesn’t solve the issue, because the system itself operates through billions of weighted connections that resist our current understanding. We have moved from an era of Science, in which we understood mechanisms through reduction, back to an era of Oracles. We consult a black box that speaks truth but offers no reasons.

“Nullius in verba” is dead. We are taking AI’s word for it.

This isn’t merely a technical problem for AI safety researchers. It is our epistemic predicament. We are building our future on tools we do not understand, making decisions we cannot explain, trusting outputs we cannot verify. The Enlightenment project rested on the principle that human reason could grasp the workings of nature. What happens when our most powerful tools exceed the grasp of reason itself?

Natural philosophy may be the only valid framework for a world with tools we do not understand.

Beyond the Technical

Today, we find ourselves at a time when we can build powerful AI systems without clear principles to guide us.

I have always resisted the fragmentation of disciplines. My soul craves inspiration from seemingly disconnected ideas coming together; my mind gets more creative and sharper at the intersection of domains; and my identity rejects a single label, the reductive “I am an investor.” This propelled me from mathematics to medicine, from surgical practice to strategy, from investing to deep technology. I see each transition as an act of intellectual synthesis, a bridge built rather than a path forsaken.

And yet the world has been telling me something different. It demanded specialization. Focus. Spending years to hone a specific skill set. The prevailing dogma holds that to venture beyond one’s domain is to waste time, to dilute one’s contribution, to risk career progress.

History offers a different perspective. But we must be honest about what made it work. Yes, natural philosophers blended “art and science,” but they also merged physics and theology.

Newton spent more time on biblical chronology and alchemy than on physics. Leibniz believed his calculus revealed the language God used to write the universe. They were “whole” because they believed in a unified Divine Order—a cosmos with telos, with purpose, given by a Creator.

You cannot simply “return” to Natural Philosophy without confronting what held it together. We fractured knowledge as we killed the shared idea of God and Purpose.

And so we need to ask: Can we have a unified Natural Philosophy in a secular, materialist world? If we want “Wholeness,” what is the glue? If it’s not God, what is it?

Many answer: “Human Flourishing.” But what is that? Autonomy? The removal of suffering? The presence of meaning? These questions aren’t technical ones. They are the deep questions of moral philosophy, and we are encoding answers to them, often unconsciously, into every AI system we build.

And yet AI itself emerged from an era of extreme specialization. The people building it are products of our hyperspecialized society. They possess extraordinary technical capability but often limited philosophical grounding in questions of human flourishing, ethical reasoning, or the nature of autonomy and agency.

This matters because AI systems could be used at critical moments, from criminal sentencing to policy decisions on acceptable freedoms to how we handle interstate conflict. Algorithmic recommendations curate our information ecosystems, shaping not only what we know but what we desire and believe. “Can machines think?” becomes “Can humans still choose freely?”

If we continue building AI systems guided only by technical capabilities and market incentives, without reflection on human autonomy and without ethical frameworks for human flourishing, we risk creating a world where humans are increasingly obsolete not because machines can think, but because humans have ceased to choose, to experiment, to author.

The Alchemist’s Return

Perhaps we should reframe the narrative entirely. Science didn’t just “leave” Natural Philosophy; it repressed it to gain power.

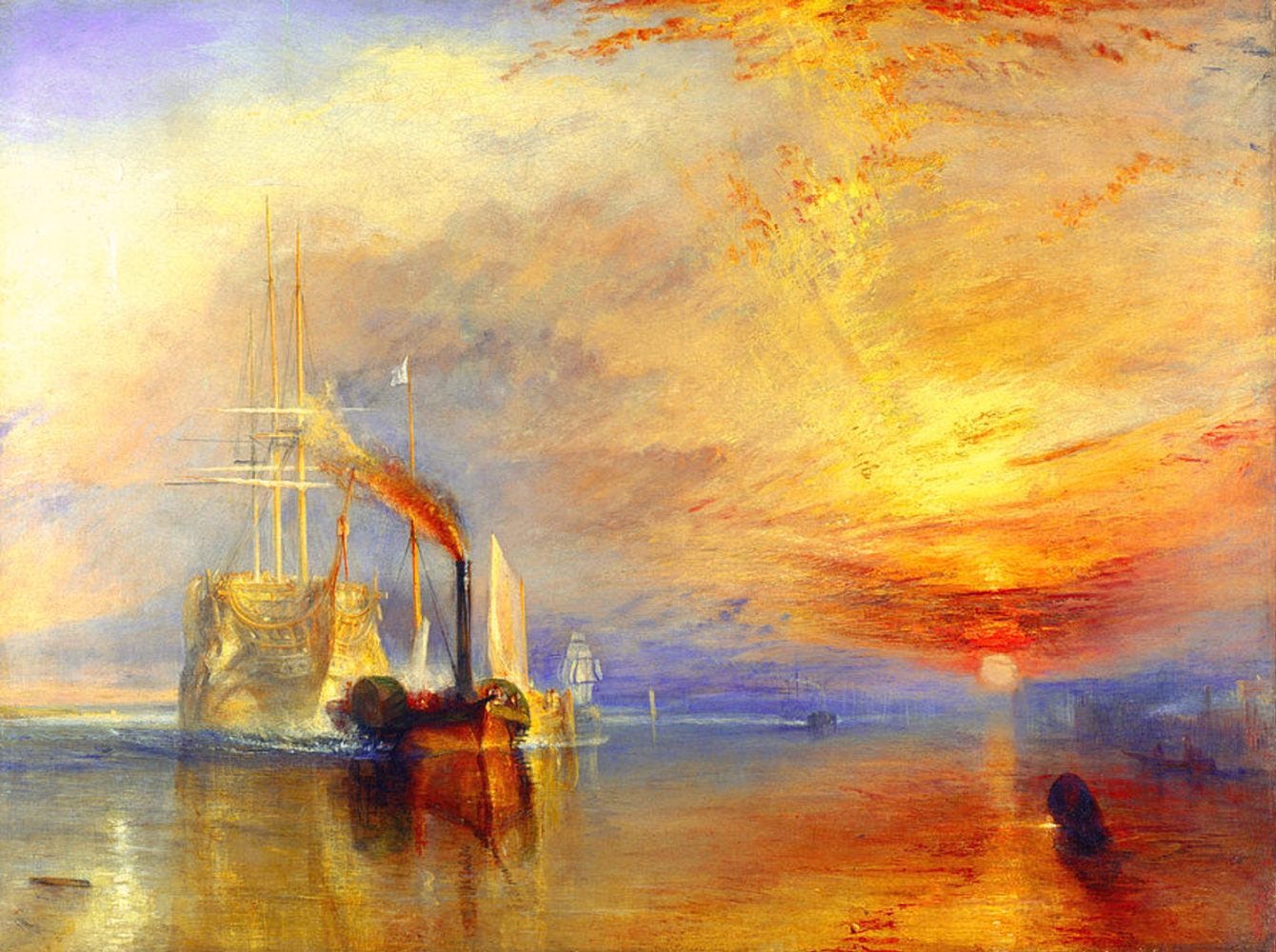

Before chemistry, there was alchemy. The alchemists wanted exactly what we want now: material transformation fused with spiritual transformation. We think of transmuting lead into gold as a quest for wealth, but it was also about perfecting matter and perfecting the soul simultaneously. The Philosopher’s Stone and the creation of the Homunculus (artificial life) were projects of wholeness.

AI is the return to the alchemist’s world.

We are creating artificial minds: the Homunculus realized. We are building systems that generate infinite variations of text, images, video, code. We are doing this not through spiritual discipline but through compute clusters and optimization algorithms. We have the alchemist’s ambitions married to the industrialist’s scale.

We have returned to consulting oracles, except now they run on GPUs. This is the holistic, mythical, magical worldview of the pre-scientific past, the world where alchemists consulted oracles and accepted mysteries, but we are reviving it with tools powerful enough to reshape civilization.

Chasing my daughter through the Science Museum, I glimpsed “Natural Philosophy” on that glass panel and felt nostalgia, but also vertigo.

The future may indeed belong to natural philosophers, those who can hold together technical mastery and ethical wisdom, mechanism and meaning, power and purpose.

But let us be clear about what this return demands. It demands we answer the questions that the fracturing of knowledge cordoned off: What is the good? What is human nature? What is sacred? These are no longer abstract philosophy, but concrete commitments we encode into systems that will shape billions of lives.

The fragmentation of Natural Philosophy was productive and necessary for its time. The specialization of science gave us extraordinary power. But we now face choices that specialization cannot solve, and oracles we cannot explain. We are building a future where humans risk becoming obsolete not because machines can think, but because humans could cease to choose, to question, to author their own existence.

We are no longer in the age of Science. We have become alchemists again, whether we admit it or not. Natural Philosophy may have been forgotten, but the age of AI is calling for its return. It is forcing us to face the questions we tried to set aside.

Cosmos Institute is the Academy for Philosopher-Builders, technologists building AI for human flourishing. We run fellowships, fund fast prototypes, and host seminars with institutions like Oxford, Aspen Institute, and Liberty Fund.

> I have always resisted the fragmentation of disciplines...my mind gets more creative and sharper at the intersection of domains...I see each transition as an act of intellectual synthesis, a bridge built rather than a path forsaken.

> ...History offers a different perspective. But we must be honest about what made it work. Yes, natural philosophers blended “art and science,” but they also merged physics and theology.

Bravo! Fully resonate with this. Also echoed by Samuel Arbesman's "Computation as Philology" https://arbesman.substack.com/p/computation-as-philology

> Philology was a sort of ur-field, in the same way that natural philosophy preceded all of the scientific disciplines that we know and love. Philology was the “queen of the human sciences,” examining the origins and etymologies of words and languages, but in the process ranged over linguistics, history, archaeology, literature, theology, art, and more.

> ...But as the study of these different humanistic fields expanded and deepened, there was a fracturing and specialization

> ...As I read about philology, I was struck by similarities to how I think about code and computation...I view computation as a similar kind of universal solvent

Great read - thank you!

I’ve been thinking about this for a while too, and wrote “The Revival of the Polymaths”: https://open.substack.com/pub/adamcohenhillel/p/the-revival-of-the-polymaths?r=1t34il&utm_medium=ios

- Your historical framing of when, why, and how the fragmentation of knowledge disciplines happened was a great additional read; I hadn’t thought of it in that way.